The field of artificial intelligence is undergoing a remarkable transformation. What began as basic language models has evolved into sophisticated AI agents capable of autonomous decision-making and complex task execution. Let’s explore this fascinating journey and peek into the future of AI agency.

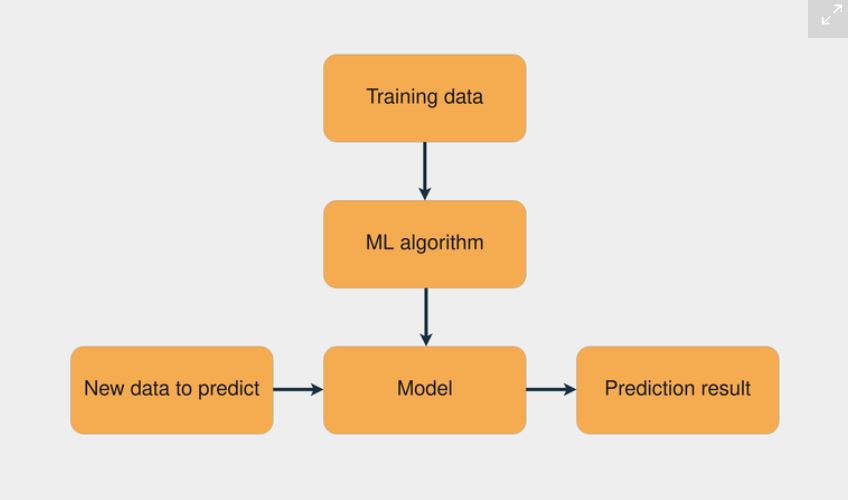

The Foundation: Large Language Models

The story begins with the fundamental building blocks: Large Language Models (LLMs). These transformer-based models were the first generation of modern AI systems in this progression, taking text as input and producing text as output. While revolutionary, they were constrained by their simplicity: they could only hold text-based conversations, and their knowledge was limited to what their training data contained.

Breaking the Context Barrier

As these systems matured, a significant limitation became apparent: the restricted context window, meaning the maximum number of tokens a model can take into account in a single request. Early models could often process only around 8,000 tokens or fewer at a time, severely limiting their ability to handle lengthy documents or maintain extended conversations. This led to the development of architectures with expanded context windows, the first major evolutionary step toward more capable systems.
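To make the limit concrete, here is a minimal Python sketch of one common workaround for a fixed window: splitting a long document into overlapping chunks that each fit the budget. It assumes whitespace-separated words as a proxy for tokens (real models use subword tokenizers, so true counts differ), and the function name `chunk_by_tokens` and the 8,000-token default are illustrative choices, not any library's API.

```python
def chunk_by_tokens(text: str, max_tokens: int = 8000, overlap: int = 200) -> list[str]:
    """Split text into chunks of at most max_tokens 'tokens'.

    Assumption: whitespace-separated words approximate tokens; a real
    system would count tokens with the model's own tokenizer.
    """
    if overlap >= max_tokens:
        raise ValueError("overlap must be smaller than max_tokens")
    tokens = text.split()
    chunks = []
    step = max_tokens - overlap  # consecutive chunks share `overlap` tokens
    for start in range(0, len(tokens), step):
        chunks.append(" ".join(tokens[start:start + max_tokens]))
        if start + max_tokens >= len(tokens):
            break  # this chunk already reaches the end of the text
    return chunks


# A 20,000-word document needs several 8,000-word windows.
doc = " ".join(f"w{i}" for i in range(20_000))
parts = chunk_by_tokens(doc)
print(len(parts), [len(p.split()) for p in parts])  # 3 [8000, 8000, 4400]
```

The overlap means text near a chunk boundary appears in both neighboring chunks, which reduces the chance that related sentences are separated.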

The RAG Revolution

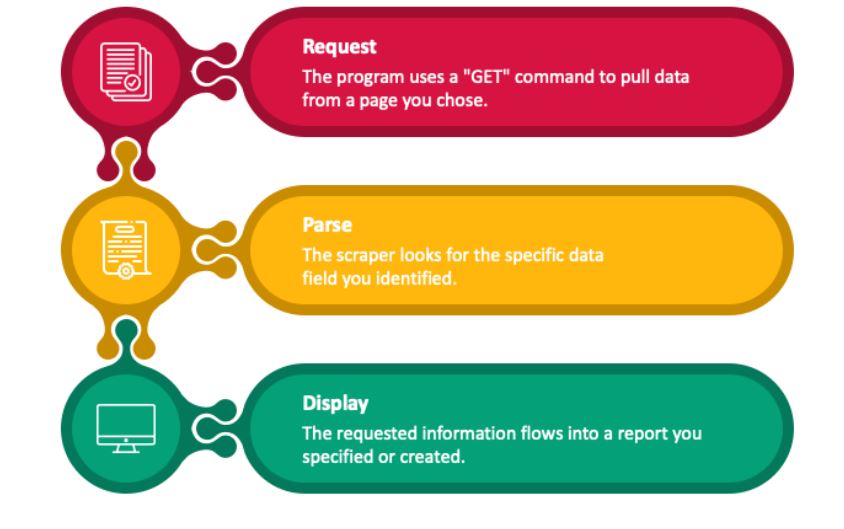

The introduction of Retrieval-Augmented Generation (RAG) was a major leap in AI capability. By retrieving relevant passages from external knowledge bases at query time and adding them to the model’s prompt, these systems could draw on current information beyond their training data, substantially improving their ability to provide accurate, up-to-date responses. The integration of tool use, such as calls to search APIs, further enhanced their utility, allowing them to gather real-time data and perform specific tasks.
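The retrieve-then-generate pattern can be sketched in a few lines of Python. This is a toy under stated assumptions: word-count vectors stand in for a learned embedding model, an in-memory list stands in for the knowledge base, and the final LLM call is left out; `embed`, `retrieve`, and `build_prompt` are hypothetical names.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system would use an embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Augment the question with retrieved context before it is sent to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


knowledge_base = [
    "the 2024 pricing page lists the pro plan at 20 dollars",
    "our office is closed on public holidays",
    "the pro plan includes priority support",
]
print(build_prompt("what does the pro plan cost", knowledge_base))
```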

The Multi-Modal Breakthrough

The next evolutionary step brought multi-modal capabilities to the forefront. AI systems could now process and generate content across different formats – text, images, and even video. This development laid the groundwork for what we now recognize as modern AI agents, creating systems that could interact with the world in ways that more closely mirror human capabilities.

The Current State: Memory-Enabled Agents

Today’s AI agents represent a sophisticated convergence of multiple technologies. They typically draw on three distinct types of memory:

- Short-term memory for immediate context

- Long-term memory for persistent knowledge

- Episodic memory for experiential learning
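One way to picture the three memory types is as three separate stores. The Python sketch below is illustrative only: the `AgentMemory` and `Episode` names are hypothetical, and production agents usually persist long-term and episodic memory in a database rather than in in-process dictionaries and lists.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Episode:
    """One past attempt at a task: what the agent did and whether it worked."""
    task: str
    actions: list[str]
    succeeded: bool


class AgentMemory:
    def __init__(self, short_term_size: int = 5) -> None:
        # Short-term: a bounded buffer of recent turns; old turns fall off automatically.
        self.short_term: deque[str] = deque(maxlen=short_term_size)
        # Long-term: persistent facts keyed by name.
        self.long_term: dict[str, str] = {}
        # Episodic: a log of past task attempts and their outcomes.
        self.episodes: list[Episode] = []

    def remember_turn(self, message: str) -> None:
        self.short_term.append(message)

    def store_fact(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def record_episode(self, episode: Episode) -> None:
        self.episodes.append(episode)

    def lessons_for(self, task: str) -> list[list[str]]:
        """Return action sequences that previously succeeded on the same task."""
        return [e.actions for e in self.episodes if e.task == task and e.succeeded]


memory = AgentMemory(short_term_size=2)
for turn in ["hi", "book a flight", "to Paris"]:
    memory.remember_turn(turn)
memory.store_fact("home_airport", "BOS")
memory.record_episode(Episode("book_flight", ["search", "select", "pay"], succeeded=True))
memory.record_episode(Episode("book_flight", ["pay"], succeeded=False))
print(list(memory.short_term))  # ['book a flight', 'to Paris']
print(memory.lessons_for("book_flight"))  # [['search', 'select', 'pay']]
```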

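These systems use vector databases for efficient similarity-based retrieval and semantic (graph) databases, such as knowledge graphs, for representing complex relationships between entities. Perhaps most importantly, they incorporate decision-making through frameworks like ReAct (Reasoning and Acting), which interleaves reasoning steps with actions such as tool calls and feeds the results back in, allowing an agent to adjust its approach when an initial attempt fails.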
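The ReAct pattern alternates a reasoning step, an action (such as a tool call), and an observation of the result. The sketch below shows that loop in Python with a scripted function standing in for the LLM so the example runs offline; `scripted_model`, `lookup_capital`, and the tool registry are hypothetical. A real agent would send the transcript to a model and parse the thought and action from its reply.

```python
from typing import Callable

# Hypothetical tool: a tiny lookup table standing in for a real API.
CAPITALS = {"france": "Paris", "japan": "Tokyo"}


def lookup_capital(country: str) -> str:
    key = country.lower()
    if key not in CAPITALS:
        raise KeyError(f"unknown country: {country}")
    return CAPITALS[key]


# Tool registry: action name -> function taking one string argument.
TOOLS: dict[str, Callable[[str], str]] = {"lookup_capital": lookup_capital}


def scripted_model(transcript: list[str]) -> tuple[str, str, str]:
    """Stand-in for an LLM: returns (thought, action, argument) from the transcript so far."""
    last = transcript[-1]
    if last.startswith("Question:"):
        return ("I should look up the capital.", "lookup_capital", "FR")
    if last.startswith("Observation: error"):
        # Adjust the approach after a failed action.
        return ("The abbreviation failed; try the full name.", "lookup_capital", "France")
    return ("I have the answer.", "finish", last.removeprefix("Observation: "))


def react_loop(question: str, max_steps: int = 5) -> tuple[str, list[str]]:
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, arg = scripted_model(transcript)
        transcript.append(f"Thought: {thought}")
        if action == "finish":
            transcript.append(f"Answer: {arg}")
            return arg, transcript
        transcript.append(f"Action: {action}[{arg}]")
        try:
            transcript.append(f"Observation: {TOOLS[action](arg)}")
        except KeyError as exc:
            # Record the failure as an observation instead of crashing.
            transcript.append(f"Observation: error: {exc}")
    return "no answer within step budget", transcript


answer, steps = react_loop("What is the capital of France?")
print(answer)  # Paris
```

Because the failed lookup is recorded as an observation rather than raised, the next reasoning step can see it and try a different argument.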

Future Architecture: A New Paradigm

Looking ahead, the architecture of AI agents is poised for another significant shift. The envisioned framework emphasizes the following layers:

Input Layer Sophistication

The systems will process multiple data types simultaneously while integrating real-time data and adaptive feedback loops. This creates a more dynamic and responsive interaction model.

Advanced Orchestration

Future agents are expected to excel at resource management, featuring sophisticated inter-agent communication and real-time performance optimization. This orchestration layer is what would let multiple agents coordinate on a shared task.
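A minimal orchestration layer can be sketched as a router that hands each subtask to the agent registered for that skill and logs every hand-off as a message. This Python sketch covers only routing and inter-agent messaging, not resource management or performance optimization; the `Orchestrator` class and the lambda "agents" are hypothetical stand-ins for model-backed agents.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Message:
    sender: str
    recipient: str
    content: str


@dataclass
class Orchestrator:
    """Routes work to agents by skill and records each hand-off as a message."""
    agents: dict[str, Callable[[str], str]] = field(default_factory=dict)
    log: list[Message] = field(default_factory=list)

    def register(self, skill: str, agent: Callable[[str], str]) -> None:
        self.agents[skill] = agent

    def run_pipeline(self, task: str, skills: list[str]) -> str:
        payload, sender = task, "orchestrator"
        for skill in skills:
            if skill not in self.agents:
                raise KeyError(f"no agent registered for skill {skill!r}")
            self.log.append(Message(sender, skill, payload))
            payload = self.agents[skill](payload)  # each agent's output feeds the next
            sender = skill
        self.log.append(Message(sender, "orchestrator", payload))
        return payload


orch = Orchestrator()
orch.register("research", lambda t: f"notes on {t}")
orch.register("write", lambda notes: f"draft based on {notes}")
result = orch.run_pipeline("solar batteries", ["research", "write"])
print(result)  # draft based on notes on solar batteries
```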

Enhanced Core Capabilities

The core of future agents will incorporate strategic planning, self-reflection, and continuous learning loops. Multiple specialized models will work in harmony, each handling specific aspects of complex tasks.
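The plan, act, and self-reflect cycle can be sketched with three functions standing in for separate specialized models: a planner, a worker, and a critic. Everything here is a hypothetical illustration in Python: the worker deliberately returns a placeholder on its first draft so the critic has something to reject, and the collected notes are where a continuous learning loop could pick up feedback.

```python
def plan(goal: str) -> list[str]:
    # Planner stand-in: break the goal into ordered steps.
    return [f"outline {goal}", f"draft {goal}", f"proofread {goal}"]


def execute(step: str, attempt: int) -> str:
    # Worker stand-in: the first drafting attempt returns a placeholder on purpose.
    if step.startswith("draft") and attempt == 0:
        return "TODO"
    return f"done: {step}"


def reflect(output: str) -> bool:
    # Critic stand-in: reject placeholder output.
    return output != "TODO"


def run_agent(goal: str, max_retries: int = 2) -> tuple[list[str], list[str]]:
    results: list[str] = []
    notes: list[str] = []  # feedback a learning loop could reuse later
    for step in plan(goal):
        for attempt in range(max_retries + 1):
            output = execute(step, attempt)
            if reflect(output):
                results.append(output)
                break
            notes.append(f"rejected attempt {attempt} of {step!r}")
        else:
            # Every attempt was rejected by the critic.
            results.append(f"failed: {step}")
    return results, notes


results, notes = run_agent("report")
print(results)  # ['done: outline report', 'done: draft report', 'done: proofread report']
print(notes)  # ["rejected attempt 0 of 'draft report'"]
```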

Innovative Data Architecture

The future of data management in AI agents will combine structured and unstructured data storage with advanced vector stores and knowledge graphs, enabling more sophisticated reasoning and relationship mapping.
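Vector stores answer "which text is similar to this?", while knowledge graphs answer relationship questions such as "in which city is Alice's employer located?". The Python sketch below shows only the graph side as a minimal in-memory triple store with a multi-hop query; the `KnowledgeGraph` class is hypothetical, and a real system would use a graph database. A hybrid design might use vector search to find the starting entity and then traverse the graph from there.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Minimal in-memory store of (subject, relation, object) triples."""

    def __init__(self) -> None:
        self.edges: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add(self, subject: str, relation: str, obj: str) -> None:
        self.edges[subject].append((relation, obj))

    def neighbors(self, subject: str, relation: str) -> list[str]:
        # .get avoids inserting empty entries into the defaultdict.
        return [o for r, o in self.edges.get(subject, []) if r == relation]

    def follow(self, start: str, path: list[str]) -> list[str]:
        """Multi-hop query: follow a sequence of relations from a start entity."""
        frontier = [start]
        for relation in path:
            frontier = [o for node in frontier for o in self.neighbors(node, relation)]
        return frontier


kg = KnowledgeGraph()
kg.add("Alice", "works_at", "Acme")
kg.add("Acme", "located_in", "Berlin")
kg.add("Bob", "works_at", "Globex")
print(kg.follow("Alice", ["works_at", "located_in"]))  # ['Berlin']
```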

Output Sophistication

The response mechanisms will become more adaptive, offering customizable formats and multi-channel delivery systems, along with automated insight generation.
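Adaptive output can be as simple as keeping one structured response and rendering it per channel. In this hypothetical Python sketch the channel names (`api`, `chat`, `sms`) and formatting rules are assumptions chosen for illustration.

```python
import json


def render(answer: str, sources: list[str], channel: str) -> str:
    """Format one structured response for a given delivery channel."""
    if channel == "api":
        # Machine-readable output for other services.
        return json.dumps({"answer": answer, "sources": sources})
    if channel == "chat":
        # Human-readable text with a bulleted source list.
        return "\n".join([answer] + [f"- {s}" for s in sources])
    if channel == "sms":
        # Assumed 160-character limit for short text messages; sources are dropped.
        return answer[:160]
    raise ValueError(f"unsupported channel: {channel}")


print(render("Your order has shipped.", ["order_db"], "api"))
print(render("Your order has shipped.", ["order_db"], "chat"))
```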

The Human Element

Perhaps most importantly, future architectures emphasize human-AI collaboration. This includes robust safety controls, ethical considerations, and regulatory compliance measures. The focus on interoperability and systematic improvement tracking is intended to keep these systems both powerful and responsible.
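One common way to implement safety controls of this kind is a human-in-the-loop gate: low-risk actions run automatically, high-risk ones wait for a person's approval, and every decision is logged. The Python sketch below assumes a hypothetical `HIGH_RISK_ACTIONS` policy and passes approval in as a callback; in practice that callback would prompt a reviewer rather than return a fixed answer.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical policy: actions that always need a human sign-off.
HIGH_RISK_ACTIONS = {"send_payment", "delete_records"}


@dataclass
class ActionRequest:
    name: str
    params: dict = field(default_factory=dict)


def guarded_execute(
    request: ActionRequest,
    execute: Callable[[ActionRequest], str],
    approve: Callable[[ActionRequest], bool],
    audit_log: list[str],
) -> str:
    """Run low-risk actions directly; ask a human before running high-risk ones."""
    if request.name in HIGH_RISK_ACTIONS and not approve(request):
        audit_log.append(f"blocked {request.name}")
        return "blocked: human approval denied"
    result = execute(request)
    audit_log.append(f"executed {request.name}")
    return result


def run(request: ActionRequest) -> str:
    return f"ran {request.name}"


def deny(request: ActionRequest) -> bool:
    return False  # in practice, this would prompt a human reviewer


log: list[str] = []
print(guarded_execute(ActionRequest("summarize"), run, deny, log))  # ran summarize
print(guarded_execute(ActionRequest("send_payment", {"amount": 100}), run, deny, log))  # blocked: human approval denied
print(log)  # ['executed summarize', 'blocked send_payment']
```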

Industry Movement

Major players like OpenAI are already moving in this direction. In a recent Reddit AMA, Sam Altman highlighted the company’s focus on agentic development, including plans to converge its separate LLMs, each currently suited to different purposes, and to build more autonomous workflows into its systems.

Conclusion

The evolution of AI agents represents one of the most significant technological progressions of our time. From simple text-processing models to sophisticated autonomous systems, each iteration has brought new capabilities and possibilities. As we look toward the future, the emphasis on safety, ethics, and human collaboration suggests that these systems will become not just more powerful, but also more responsible and beneficial to society.

The next generation of AI agents won’t just be incrementally better – they’ll be fundamentally different, combining advanced capabilities with robust safety measures and ethical considerations. This evolution promises to accelerate development across various fields while maintaining human values at its core.

The post The Evolution of AI Agents: From Simple LLMs to Autonomous Systems appeared first on Datafloq.