There are always misunderstandings about a new technology or methodology, but they seem to be even worse when it comes to generative AI. This is in part due to how new generative AI is and how fast it has been adopted. In this post, I’m going to dive into one aspect of generative language applications that is not widely recognized and that makes many of the use cases I hear people targeting with this toolset totally inappropriate.

A Commonly Discussed Generative AI Use Case

Text-based chatbots have been around for a long time and are now ubiquitous on corporate websites. Companies are now scrambling to use ChatGPT or similar toolsets to upgrade their website chatbots. There is also a lot of talk about voice bots handling calls by reciting the text generated in answer to a customer’s question. This sounds terrific, and it is hard not to get excited at first glance about the potential of such an approach. The approach has a major flaw, however, that will derail efforts to implement it.

Let’s first look at the common misunderstanding that makes such use cases inappropriate and then we can discuss a better, more realistic solution.

Same Question, Different Answers!

I’ve written in the past about how all generative AI responses are effectively hallucinations. When it comes to text, generative AI tools literally generate answers word by word using probabilities. People are now widely aware that you can’t take an answer from ChatGPT as true without some validation. What most people don’t yet realize is that, due to how it is configured, you can get totally different answers to the exact same question!

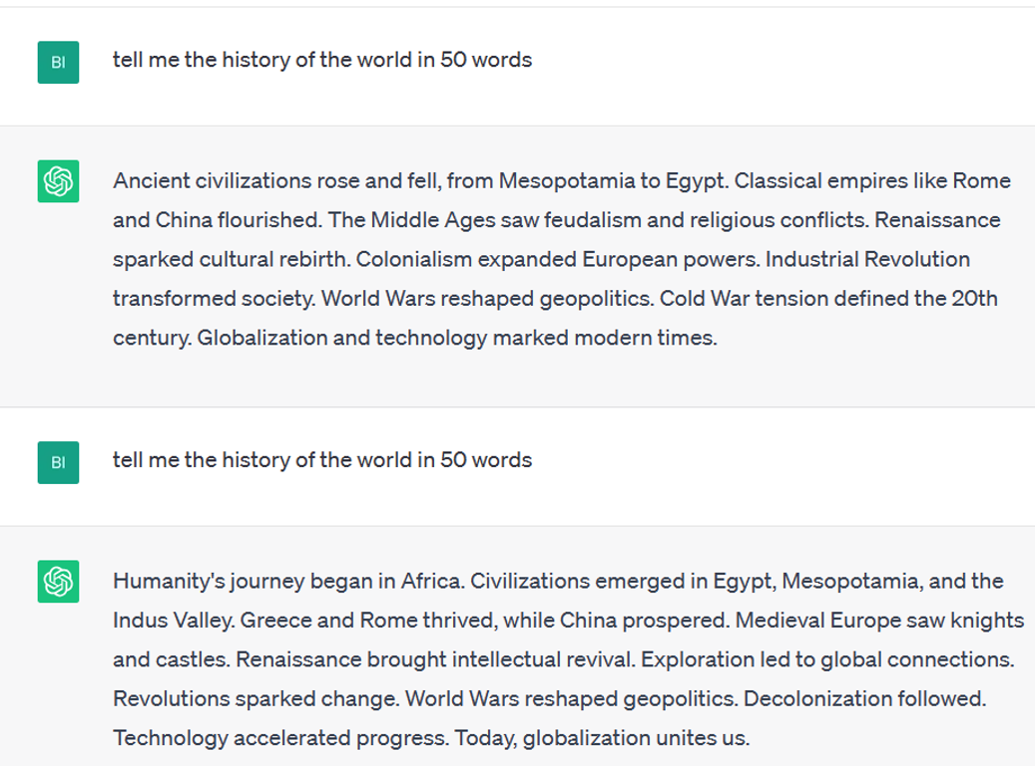

In the image above, I asked ChatGPT to “Tell me the history of the world in 50 words”. You can see that while there are some similarities, the two answers are not nearly the same. In fact, they each have some content not mentioned in the other. Keep in mind that I submitted the second prompt literally as soon as I got my first answer. The total time between prompts was maybe 5 seconds. You may be wondering, “How can that be!?” There is a very good and intentional reason for this inconsistency.

Injecting Randomness Into Responses

While ChatGPT generates an answer probabilistically, it does not simply pick the most probable next word at each step. Testing showed that if you let a generative language application always pick the highest probability words, answers will sound less human and be less robust. However, if you were to force only the highest probability words you would, in fact, get exactly the same answer every time for a given prompt.

It was found that choosing from among a pool of the highest probability next words will lead to much better answers. There is a setting in ChatGPT (and competing tools) that specifies how much randomness will be injected into answers. The more you desire a factual answer to a question, the less randomness is desired because the best answer is preferred. The more creativity desired, such as creating a poem, the more randomness should be allowed so that answers can drift in unexpected ways.
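To make the idea concrete, here is a minimal sketch of how a randomness setting can change which next word gets picked. It is an illustration only, not how ChatGPT is actually implemented. The setting is commonly called "temperature", and the function name `sample_next_word`, the candidate words, and their scores are all made up for this example.

```python
import math
import random


def sample_next_word(scores, temperature, rng):
    """Pick one next word from a dict of word -> raw model score.

    The scores and the temperature scaling are assumptions for this sketch.
    """
    if temperature == 0:
        # No randomness: always take the single highest-scoring word.
        return max(scores, key=scores.get)
    # Divide scores by the temperature, then turn them into sampling weights.
    # A higher temperature flattens the weights, so unlikely words win more often.
    scaled = {word: score / temperature for word, score in scores.items()}
    top = max(scaled.values())
    weights = [math.exp(value - top) for value in scaled.values()]
    return rng.choices(list(scaled), weights=weights, k=1)[0]


# Hypothetical scores for the word that follows some prompt.
scores = {"the": 2.0, "ancient": 1.6, "early": 1.5, "fire": 0.3}

rng = random.Random(0)
greedy = [sample_next_word(scores, 0, rng) for _ in range(5)]
sampled = [sample_next_word(scores, 1.0, rng) for _ in range(200)]

print(set(greedy))   # only one distinct word when randomness is off
print(set(sampled))  # several distinct words when randomness is on
```

With the temperature at zero, the same word comes back on every call. With any randomness allowed, repeated calls on identical scores return different words, and that difference compounds as an answer is built word by word.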

The key point, however, is that injecting this randomness takes what are already effectively hallucinated answers and makes them different every time. In most business settings, it isn’t acceptable to have an answer generated each time a given question is asked that is both different and potentially flawed!

Forget Those Generative AI Chatbots

Now let’s tie this all together. Let’s say I’m a hotel company and I want a chatbot to help customers with common questions. These might include questions about room availability, cancellation policy, property features, etc. Using generative AI to answer customer questions means that every customer can get a different answer. Worse, there is no guarantee that the answers are correct. When someone asks about a cancellation policy, I want to provide the verbatim policy itself and not generate a probabilistic answer. Similarly, I want to provide actual room availability and rates, not probabilistic guesses.

The same issue arises when asking for a legal document. If I need legal language to address ownership of intellectual property (IP), I want real, validated language word for word since even a single word change in a legal document can have big consequences. Using generated language for IP protection as-is with no expert review is incredibly risky. The generated legalese may sound great and may be mostly accurate, but any inaccuracies can have a very high cost.

Use An Ensemble Approach To Succeed

Luckily, there are approaches already available that will avoid the issues with the inaccuracy and inconsistency of generative AI’s text responses. I wrote recently about the concept of using ensemble approaches and this is a case where an ensemble approach makes sense. For our chatbot, we can use traditional language models to diagnose what question a customer is asking and then use traditional searches and scripts to provide accurate, consistent answers.

For example, if I ask about room availability, the system should check the actual availability and then respond with the exact data. There is no information that should be generated. If I ask about a cancellation policy, the policy should be found and then provided verbatim to the customer. Less precise questions such as “what are the most popular features of this property” can be mapped to prepared answers and delivered much in the way a call center agent uses a set of scripted answers for common questions.
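The flow just described can be sketched in a few lines. This is a toy illustration under stated assumptions: the keyword matching in `classify_intent` is a stand-in for a real text classification model, and the policy text, scripted answer, and availability data are all hypothetical placeholders for a hotel's actual systems.

```python
# Hypothetical stored content; in practice this would come from real systems.
CANCELLATION_POLICY = "Cancel up to 48 hours before check-in for a full refund."
SCRIPTED_ANSWERS = {
    "popular_features": "Guests most often mention the rooftop pool and the free breakfast.",
}
AVAILABILITY = {"2024-07-01": 4, "2024-07-02": 0}  # date -> rooms left


def classify_intent(question):
    """Stand-in for a trained classifier: map a question to an intent label."""
    text = question.lower()
    if "cancel" in text:
        return "cancellation_policy"
    if "available" in text or "availability" in text:
        return "room_availability"
    if "feature" in text or "popular" in text:
        return "popular_features"
    return "unknown"


def answer(question, date=None):
    """Return a looked-up or scripted answer; nothing here is generated."""
    intent = classify_intent(question)
    if intent == "cancellation_policy":
        return CANCELLATION_POLICY  # the policy, verbatim
    if intent == "room_availability":
        rooms = AVAILABILITY.get(date)
        if rooms is None:
            return "Please tell me the date you would like to stay."
        return f"Rooms available on {date}: {rooms}"  # the actual data
    if intent in SCRIPTED_ANSWERS:
        return SCRIPTED_ANSWERS[intent]
    return "Let me connect you with an agent."


print(answer("What is your cancellation policy?"))
print(answer("Is a room available?", date="2024-07-01"))
print(answer("What are the most popular features of this property?"))
```

The model's only job is to work out what is being asked. Every answer is then retrieved rather than written on the fly, so two customers who ask the same question get the same response.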

In our hotel example, generative AI isn’t needed or appropriate for helping customers get answers to their questions. However, other types of models that analyze and classify text do apply. Combining those models with repositories that can be searched for the answer once a question is understood will ensure that consistent and accurate information is provided to customers. This approach may not be using generative AI, but it is a powerful and valuable solution for a business. As always, don’t focus on “implementing generative AI” but instead focus on what is needed to best solve your problem.

Originally posted in the Analytics Matters newsletter on LinkedIn

The post No, That Is Not A Good Use Case For Generative AI! appeared first on Datafloq.