Last time I blogged about the New WAL Archive Module/Library feature available in PostgreSQL 15, which is quite transformative in how WALs are archived today in PostgreSQL. PostgreSQL 15 has many more improvements related to WAL archiving, which is worth discussing. In this blog, I would like to highlight some of them which solve great operational challenges for many of the PostgreSQL users.

Basics of WAL archiving

Let’s first discuss one of the problems that existed in PostgreSQL 14 and older.

When Postgresql generates WAL segment files in the pg_wal directory, it also generates associated

.ready

files in

pg_wal/archive_status

subdirectory.

For example,

$ ls -alrth pg_wal/ drwx------ 3 postgres postgres 68 Dec 7 05:47 . drwx------ 21 postgres postgres 32 Dec 21 03:54 .. -rw------- 1 postgres postgres 16M Dec 21 04:38 0000000200000008000000E4 -rw------- 1 postgres postgres 16M Dec 21 04:38 0000000200000008000000E5 -rw------- 1 postgres postgres 16M Dec 21 04:38 0000000200000008000000E6 -rw------- 1 postgres postgres 16M Dec 21 04:38 0000000200000008000000E7 drwx------ 2 postgres postgres 6 Dec 21 04:38 archive_status -rw------- 1 postgres postgres 16M Dec 21 04:38 0000000200000008000000E8 -bash-4.2$ ls -alrth pg_wal/archive_status/ total 5.0K drwx------ 3 postgres postgres 68 Dec 7 05:47 .. -rw------- 1 postgres postgres 0 Dec 21 04:38 0000000200000008000000E4.ready -rw------- 1 postgres postgres 0 Dec 21 04:38 0000000200000008000000E5.ready -rw------- 1 postgres postgres 0 Dec 21 04:38 0000000200000008000000E6.ready -rw------- 1 postgres postgres 0 Dec 21 04:38 0000000200000008000000E7.ready drwx------ 2 postgres postgres 6 Dec 21 04:38 .

This indicates that WAL segment files up to

0000000200000008000000E7

are ready for archiving, and the

0000000200000008000000E8

is the current WAL segment file which is not yet ready to be archived.

postgres=# SELECT pg_walfile_name(pg_current_wal_lsn()); pg_walfile_name -------------------------- 0000000200000008000000E8 (1 row)

Once the WALs are archived to the backup location (archive destination), the status changes to

.done

$ ls -alrth pg_wal/archive_status/ total 5.0K drwx------ 3 postgres postgres 68 Dec 7 05:47 .. -rw------- 1 postgres postgres 0 Dec 21 04:38 0000000200000008000000E4.done -rw------- 1 postgres postgres 0 Dec 21 04:38 0000000200000008000000E5.done -rw------- 1 postgres postgres 0 Dec 21 04:38 0000000200000008000000E6.done -rw------- 1 postgres postgres 0 Dec 21 04:38 0000000200000008000000E7.done

PostgreSQL uses these status files to understand what are the WAL files pending for archiving.

PostgreSQL scans the directory

pg_wal/archive_status/

to understand the oldest WAL segment file that has not yet been archived.

PostgreSQL’s archiver process wakes up every 60 seconds (default) and tries to address every pending WAL segment by executing an internal function

pgarch_ArchiverCopyLoop();

Which in turn executes the

archive_command

for each of the WAL segment files. But the WAL archiving should happen in the right order and only for the remaining WAL segment files.

The decision on which WAL file to be handled next is decided by the function

pgarch_readyXlog()

. The following comments in the PostgreSQL code can tell the whole story.

/* * pgarch_readyXlog * * Return name of the oldest xlog file that has not yet been archived. * No notification is set that file archiving is now in progress, so * this would need to be extended if multiple concurrent archival * tasks were created. If a failure occurs, we will completely * re-copy the file at the next available opportunity. * * It is important that we return the oldest, so that we archive xlogs * in order that they were written, for two reasons: * 1) to maintain the sequential chain of xlogs required for recovery * 2) because the oldest ones will sooner become candidates for * recycling at time of checkpoint * * NOTE: the "oldest" comparison will consider any .history file to be older * than any other file except another .history file. Segments on a timeline * with a smaller ID will be older than all segments on a timeline with a * larger ID; the net result being that past timelines are given higher * priority for archiving. This seems okay, or at least not obviously worth * changing. */

The problem

But this function

pgarch_readyXlog() needs

to scan through the files in

pg_wal/archive_status/

for deciding which is the next candidate for archiving. So effectively each WAL file to be archived results in a full directory scan.

What if there are thousands or millions of files in

pg_wal/archive_status/

? This happens in a high transaction system where WAL archiving is not able to catch up with WAL generation in peak hours or if WAL arching is failing for some time. Once there are a huge number of

.ready

status files accumulated, the directory scans themselves will start taking more time. Effectively the chance of WAL arching to catch up becomes very glim.

If this happens in PG14 or older, the only solution is to try increasing wal_segment_size to a much bigger value, so that a lesser number of files will be generated. We had customer cases where we increased the

wal_segment_size

from the default 16MB to 1GB to address this problem. Of course, that’s a dirty workaround with obvious consequences like huge data loss if a backup need to be restored.

How PostgreSQL 15 solves this problem

Multiple solutions and patches are discussed with all possibilities. If you want to look into the full details, you can refer to the thread in the mailing list here:

The discussion converged into two approaches:

- Scan the directory and hold the result in an array and provide the same for archive_command or module. Even though this can drastically reduce the number of directory scans, the directory scans still happen, and associated O(n^2) complexity exists.

- A much smarter approach is to predict the next WAL segment file (based on the WAL filename format) and attempt to see the same in the directory. Directory scans can be avoided for the major part of the logic.

It was a very difficult decision on which approach should be taken. After weighing all the implications, the first approach, which holds the WAL segment filenames in an array, was selected, mainly because this array can be used for further improvement of sending multiple files to archive_command or module at a time, which is another major area which needs improvement.

How it works in PostgreSQL 15

The idea is to scan the archive_status directory with .ready files and accumulate the list of WAL files to be archived into an array the array size can be controlled using a constant definition at compile time.

/* * Maximum number of .ready files to gather per directory scan. */ #define NUM_FILES_PER_DIRECTORY_SCAN 64

So a directory scan will be done after 64 .ready files are handled.

Since it is very important to push the timeline history to the archive, it will be prioritized over the WAL segments. This is done by especially triggering a directory scan whenever there is a timeline switch.

Overall, 20x or more performance improvement is reported in the community

Better monitoring of WAL archiving

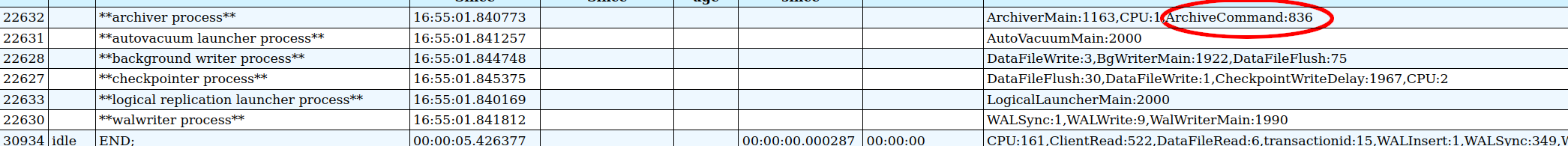

A new set of wait_events are added in PostgreSQL 15 for better observability and troubleshooting of WAL Archiving, Restore, and Cleanup phases.

| ArchiveCleanupCommand | Waiting for archive_cleanup_command to complete. |

| ArchiveCommand | Waiting for archive_command to complete. |

| RecoveryEndCommand | Waiting for recovery_end_command to complete. |

| RestoreCommand | Waiting for restore_command to complete. |

This wait event monitoring can tell us what the amount of time spent on specific actions is. For example, the wait event “ArchiveCommand” tells us that the shell command specified in “archive_command” is under execution.

Tools/Scripts like pg_gather can effectively utilize these waits to understand what percentage of time is spent on executing the archive_command and whether the speed of archive_command is a bottleneck in WAL archiving.

Thanks to Community! I want to acknowledge the great contributions, namely Robert Haas, who was the committer/coordinator of the improvement, Dipesh Pandit, whose work showed the way forward, and finally, Nathan Bossart, who gave the array approach and Dilip Kumar.

Thanks to Fujii Masao for the wait event patches.

Thanks to others who participated in discussions and reviews: Michael Paquier, Bharath Rupireddy, Andres Freund, Andrey Borodin, Dilip Kumar, Stephen Frost, Kyotaro Horiguchi, Jeevan Ladhe, and many others.