I wrote this post on MyRocks because I believe it is the most interesting new MySQL storage engine to have appeared over the last few years. Although MyRocks is very efficient for writes, I chose a more generic workload to illustrate a different MyRocks use case.

The use case is the TPC-C benchmark, executed not on a high-end server but on a lower-spec virtual machine that is I/O limited, as is the case with AWS EBS volumes, for example. I decided to use a virtual machine with two CPU cores, 4 GB of memory, and storage limited to a maximum of 1000 IOPs of 16KB each. The storage device has performance characteristics similar to those of an AWS gp2 EBS volume of about 330 GB. I emulated these limits using the KVM iotune settings in my lab.

    <iotune>
      <total_iops_sec>1000</total_iops_sec>
      <total_bytes_sec>16384000</total_bytes_sec>
    </iotune>
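As a quick sanity check on these limits, the sketch below multiplies the IOPs cap by the 16KB I/O size to recover the byte limit. It also applies the gp2 baseline rule of 3 IOPs per GiB to the 330 GB volume size. The variable names are mine, and the gp2 rule is the baseline figure only, ignoring burst credits.

```python
# Sanity-check the iotune limits against the figures quoted in the text.
IOPS_LIMIT = 1000            # total_iops_sec
IO_SIZE_BYTES = 16 * 1024    # 16KB per I/O operation

bytes_per_sec = IOPS_LIMIT * IO_SIZE_BYTES
print(bytes_per_sec)         # 16384000, the total_bytes_sec value

# Assumption: gp2 baseline of 3 IOPs per GiB, burst credits ignored.
GP2_IOPS_PER_GIB = 3
volume_gib = 330
gp2_baseline_iops = GP2_IOPS_PER_GIB * volume_gib
print(gp2_baseline_iops)     # 990, close to the 1000 IOPs cap
```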

MyRocks and RocksDB

If you wonder what the difference between MyRocks and RocksDB is, think of MyRocks as the piece of code, or the glue, that allows MySQL to store data in RocksDB. RocksDB is a very efficient key-value store based on LSM trees. MyRocks stores each table row in RocksDB using an index id concatenated with the primary key as the key, and the internal MySQL binary row representation as the value. MyRocks handles secondary indexes in a similar fashion. There are obviously tons of details, but that is the main principle behind MyRocks. Inside MyRocks, there is an embedded instance of RocksDB running.
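To make the key layout more concrete, here is a minimal sketch of the idea, not the actual MyRocks encoding. It assumes a 4-byte big-endian index id followed by an 8-byte big-endian unsigned integer primary key. `encode_key` and `decode_key` are hypothetical helpers, and the real format covers many column types and is more involved.

```python
import struct

def encode_key(index_id, pk):
    """Hypothetical layout: 4-byte big-endian index id + 8-byte big-endian unsigned PK."""
    return struct.pack(">I", index_id) + struct.pack(">Q", pk)

def decode_key(key):
    """Reverse of encode_key: returns (index_id, pk)."""
    index_id, pk = struct.unpack(">IQ", key)
    return index_id, pk

# Big-endian encoding makes a plain byte-wise sort group keys by index,
# then order them by primary key within each index.
keys = [encode_key(257, 1), encode_key(256, 300), encode_key(256, 2)]
ordered = [decode_key(k) for k in sorted(keys)]
print(ordered)  # [(256, 2), (256, 300), (257, 1)]
```

Because a key-value store like RocksDB keeps keys sorted, a prefix of this kind keeps all the rows of one index physically close together.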

Dataset

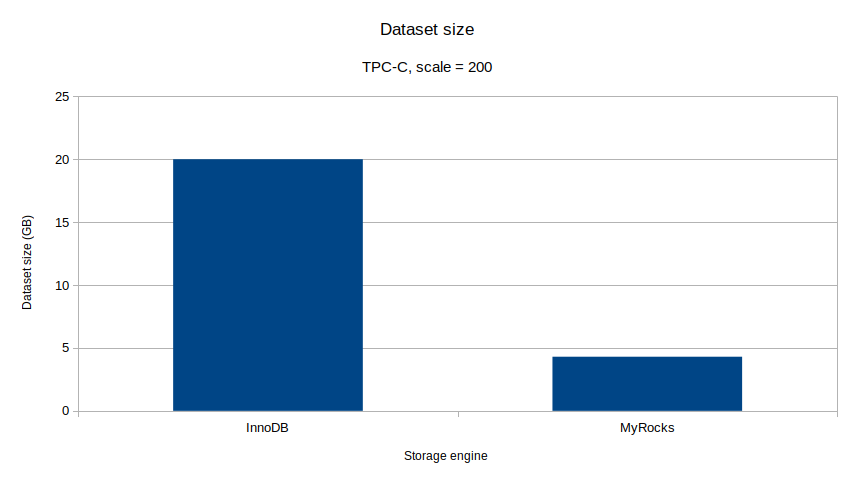

I used a TPC-C dataset with a scale of 200. As the accompanying figure shows, the dataset size differs widely between InnoDB and MyRocks: 20GB with InnoDB but only 4.3GB with MyRocks. This is a tribute to the efficient compression capabilities of MyRocks.

InnoDB and MyRocks dataset sizes

A keen observer will quickly realize that the compressed dataset size with MyRocks is roughly the same as the amount of memory of the virtual machine. This is not an accident; it is on purpose. I want to illustrate, maybe using an obvious use case, that you can’t rely on general rules like “InnoDB is faster for reads” or “MyRocks is only good for writes”. A careful answer would be: “it depends…”

TPC-C on MyRocks

To run the sysbench TPC-C script on MyRocks, you need to use a binary collation and the read-committed isolation level. You must also avoid foreign key constraints. A typical sysbench invocation would look like this:

    ./tpcc.lua --mysql-host=10.0.4.112 --mysql-user=sysbench --mysql-password=sysbench --mysql-ssl=off \
      --mysql-db=sysbench --threads=4 --scale=200 --use_fk=0 --mysql_storage_engine=rocksdb \
      --mysql_table_options="COLLATE latin1_bin" --trx_level=RC --report-interval=10 --time=3600 run

I used a rocksdb_block_cache_size of 512MB. I wanted most of the memory to be available for the file cache, where the compressed SST files will be cached. The block cache just needs to be large enough to keep the index and filter blocks in memory. In terms of compression, the relevant settings in the column family options are:

compression_per_level=kLZ4Compression;bottommost_compression=kZSTD;compression_opts=-14:1:0

With these settings, MyRocks uses ZStd at level 1 for the bottom level and LZ4 for the upper levels. The bottom-level compression is really critical because the bottom level contains most of the data.
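To show where the "level 1" comes from, the sketch below splits the option string from above into its parts. I read the three colon-separated fields of compression_opts as window_bits:level:strategy, which is my understanding of the RocksDB option-string format, so verify it against your version. `parse_cf_options` is a hypothetical helper, not part of MyRocks.

```python
def parse_cf_options(options):
    """Split a 'name=value;name=value' option string into a dict."""
    pairs = (item.split("=", 1) for item in options.split(";") if item)
    return {name.strip(): value.strip() for name, value in pairs}

cf_options = parse_cf_options(
    "compression_per_level=kLZ4Compression;"
    "bottommost_compression=kZSTD;"
    "compression_opts=-14:1:0"
)

# Assumption: the fields are window_bits:level:strategy, in that order.
window_bits, level, strategy = (
    int(field) for field in cf_options["compression_opts"].split(":")
)
print(cf_options["bottommost_compression"], level)  # kZSTD 1
```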

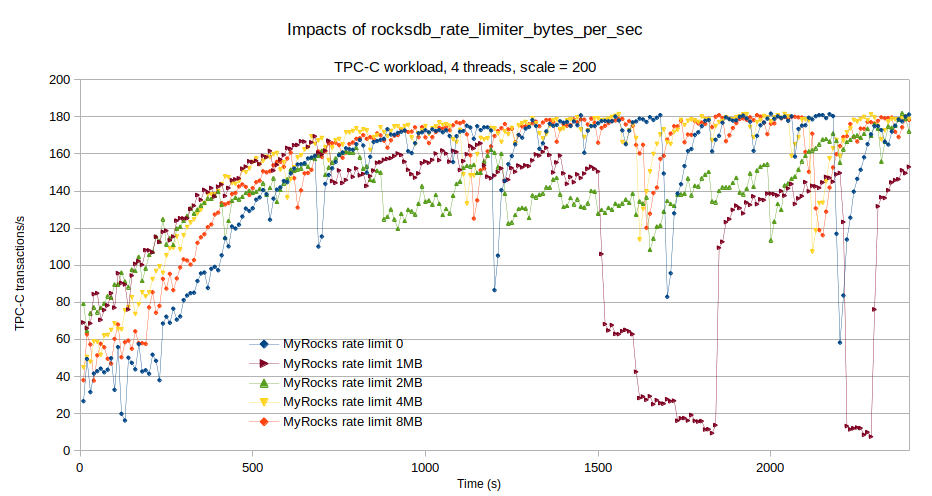

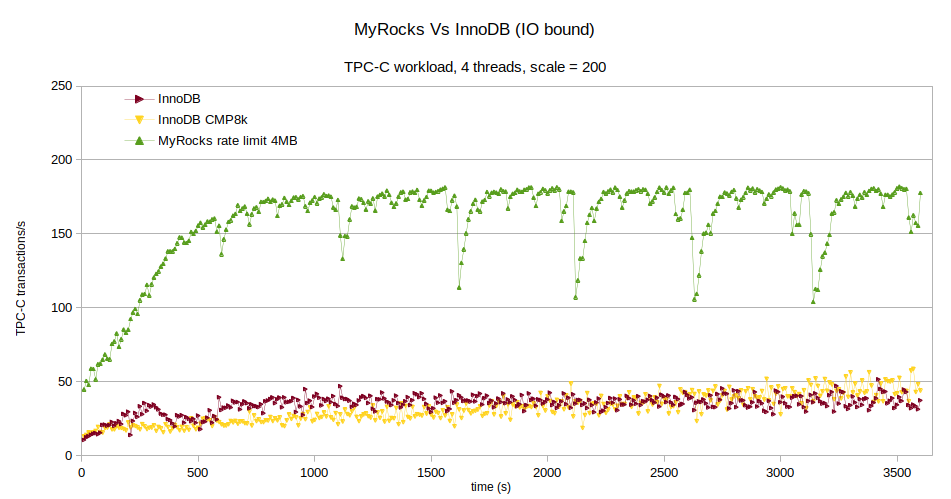

Being an LSM-type storage engine, RocksDB must frequently perform level compactions. Level compactions consume IOPs, and in environments where IOPs are scarce, they hurt performance. Fortunately, the variable rocksdb_rate_limiter_bytes_per_sec limits the impact of compaction by capping the IO bandwidth used by the background compaction threads. The following figure illustrates the impacts.

As the filesystem cache and the block cache warm up, the TPC-C transaction rate rises from 50 to around 175/s. After roughly 500s, the need for compaction arises and performance drops. With no rate limit (0), the background threads consume too many IOPs and compaction adversely affects the workload. With lower values of rocksdb_rate_limiter_bytes_per_sec, the impacts are reduced and the compactions are spread over longer periods of time.

For this environment, a rate limit of 4 MB/s yields the smallest performance drops. Once warmed up, the performance level never fell under 100 Trx/s. If you set rocksdb_rate_limiter_bytes_per_sec too low, for example at 1MB/s, compaction cannot keep up and processing has to stall for some time. You should allocate enough bandwidth for compaction to avoid these stalls.
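To put these rate limits in perspective, here is a small calculation of the share of the device bandwidth that compaction may use at each setting. It assumes that "MB/s" means MiB/s and uses the 16384000 bytes/s cap from the iotune settings. `compaction_share` is a hypothetical helper, and the calculation ignores the separate IOPs cap.

```python
DEVICE_BYTES_PER_SEC = 16_384_000   # total_bytes_sec from the iotune settings
MIB = 1024 * 1024                   # assumption: "MB/s" means MiB/s

def compaction_share(rate_limit_mib):
    """Fraction of the device bandwidth that compaction may consume."""
    return rate_limit_mib * MIB / DEVICE_BYTES_PER_SEC

for limit in (1, 2, 4):
    print(f"{limit} MB/s -> {compaction_share(limit):.1%} of device bandwidth")
# 1 MB/s -> 6.4% of device bandwidth
# 2 MB/s -> 12.8% of device bandwidth
# 4 MB/s -> 25.6% of device bandwidth
```

Under these assumptions, the 4 MB/s setting leaves roughly three quarters of the bandwidth for the foreground workload.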

Long term stability

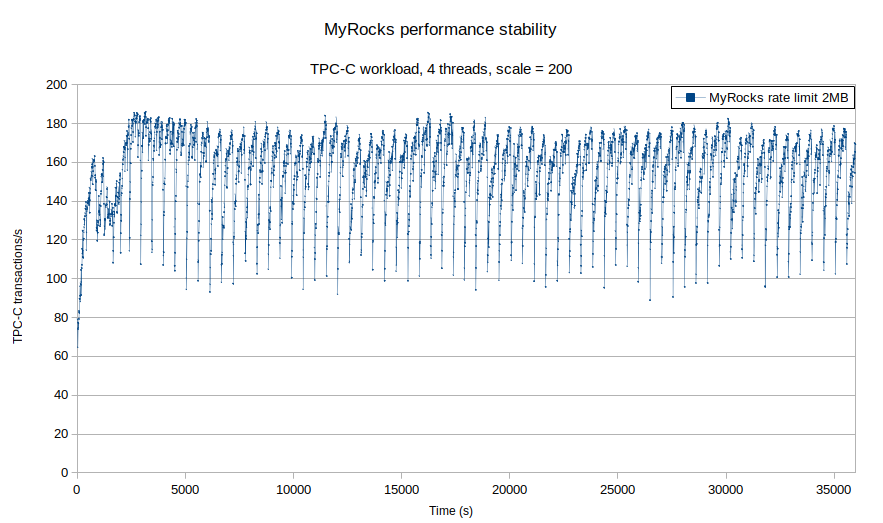

Over time, as data accumulates in the RocksDB LSM tree, performance can degrade. Using the 2 MB/s rate limiter, I pushed the runtime to 10 hours and observed very little degradation, as shown in the following figure.

There are of course many compaction events but the performance baseline remains stable.

MyRocks vs InnoDB

Now, how does this workload perform on InnoDB? InnoDB is more IO bound than MyRocks, essentially because the 20GB dataset is large compared to the 3GB buffer pool.

Compaction events reduce MyRocks performance, but even then, the transaction rate stays well above the InnoDB one. Over the course of one hour, InnoDB executed 125k transactions while MyRocks achieved in excess of 575k transactions. Even when InnoDB uses compression (CMP8k), its performance level is still much lower.
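For readers who prefer rates to totals, the following sketch converts the one-hour transaction counts into average transactions per second. The MyRocks count is quoted as "in excess of 575k", so I treat it as a lower bound. The variable names are mine.

```python
RUN_SECONDS = 3600        # one-hour run
innodb_trx = 125_000
myrocks_trx = 575_000     # "in excess of 575k", so a lower bound

innodb_rate = innodb_trx / RUN_SECONDS
myrocks_rate = myrocks_trx / RUN_SECONDS
ratio = myrocks_trx / innodb_trx

print(f"InnoDB:  {innodb_rate:.1f} trx/s")   # InnoDB:  34.7 trx/s
print(f"MyRocks: {myrocks_rate:.1f} trx/s")  # MyRocks: 159.7 trx/s
print(f"Ratio:   {ratio:.1f}x")              # Ratio:   4.6x
```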

Conclusion

I hope this post has raised your interest in the MyRocks storage engine. If you are paying too much for cloud-based storage and IOPs, make sure you evaluate MyRocks as it has super compression capabilities and is IO efficient.

Note: all the raw results, scripts, and configuration files used for this post can be found on GitHub.