Amazon Redshift is a fast, fully managed, petabyte-scale data warehouse that gives you the flexibility to use provisioned or serverless compute for your analytical workloads. With Amazon Redshift Serverless and Query Editor v2, you can load and query large datasets in just a few clicks and pay only for what you use. The decoupled compute and storage architecture of Amazon Redshift lets you build highly scalable, resilient, and cost-effective workloads. Many customers migrate their data warehousing workloads to Amazon Redshift to benefit from its rich capabilities. The following are just some of the notable capabilities:

- Amazon Redshift integrates with the broader set of AWS analytics services, so you can choose the right tool for each job. Modern analytics is much broader than SQL-based data warehousing: with Amazon Redshift, you can build lakehouse architectures and then perform any kind of analytics, such as interactive analytics, operational analytics, big data processing, visual data preparation, predictive analytics, and machine learning (ML).

- You don’t need to worry about workloads such as ETL jobs, dashboards, and ad hoc queries interfering with each other. You can isolate workloads using data sharing while still using the same underlying datasets.

- When many users run queries at peak times, compute scales seamlessly within seconds to provide consistent performance at high concurrency. You get one hour of free concurrency scaling credit for every 24 hours of usage. This free credit meets the concurrency demand of 97% of the Amazon Redshift customer base.

- Amazon Redshift is easy to use, with self-tuning and self-optimizing capabilities, so you can get faster insights without spending valuable time managing your data warehouse.

- Fault tolerance is built in. All data written to Amazon Redshift is automatically and continuously replicated to Amazon Simple Storage Service (Amazon S3), and failed hardware is replaced automatically.

- Amazon Redshift is simple to interact with. You can access data from traditional, cloud-native, containerized, serverless web services-based, and event-driven applications.

- Redshift ML makes it easy for data scientists to create, train, and deploy ML models using familiar SQL. They can also run predictions using SQL.

- Amazon Redshift provides comprehensive data security at no extra cost. You can set up end-to-end data encryption, configure firewall rules, and define granular row-level and column-level security controls on sensitive data.

- Amazon Redshift integrates seamlessly with other AWS services and third-party tools. You can move, transform, load, and query large datasets quickly and reliably.

In this post, we provide a walkthrough for migrating a data warehouse from Google BigQuery to Amazon Redshift using AWS Schema Conversion Tool (AWS SCT) and AWS SCT data extraction agents. AWS SCT is a service that makes heterogeneous database migrations predictable by automatically converting the majority of the database code and storage objects to a format that is compatible with the target database. Any objects that can’t be automatically converted are clearly marked so that they can be manually converted to complete the migration. Furthermore, AWS SCT can scan your application code for embedded SQL statements and convert them.

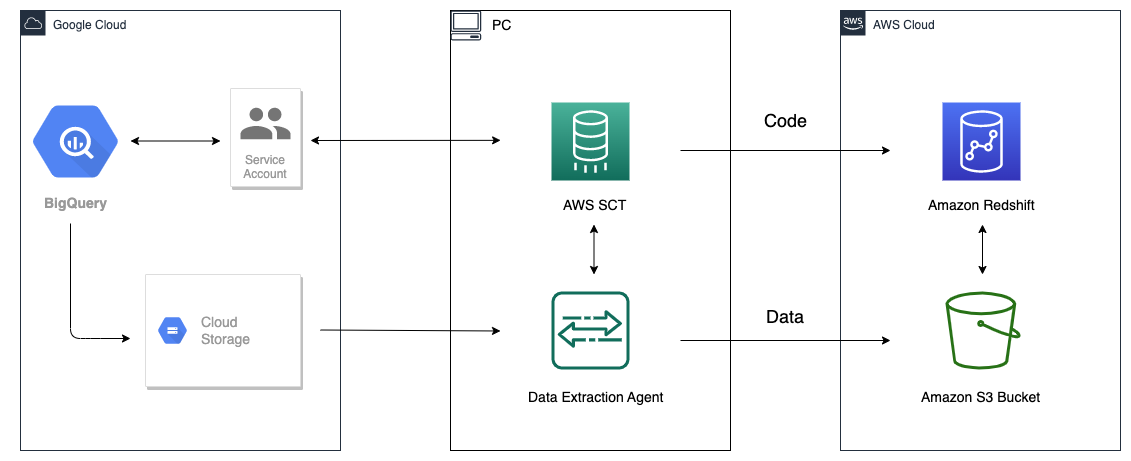

Solution overview

AWS SCT uses a Google Cloud service account to connect to your BigQuery project. First, we create an Amazon Redshift database to migrate the BigQuery data into. Next, we create an S3 bucket. Then, we use AWS SCT to convert the BigQuery schemas and apply them to Amazon Redshift. Finally, to migrate the data, we use AWS SCT data extraction agents, which extract data from BigQuery, upload it to the S3 bucket, and then copy it into Amazon Redshift.

Prerequisites

Before starting this walkthrough, you must have the following prerequisites:

- A workstation with AWS SCT, Amazon Corretto 11, and Amazon Redshift drivers.

- You can use an Amazon Elastic Compute Cloud (Amazon EC2) instance or your local desktop as a workstation. In this walkthrough, we use an Amazon EC2 Windows instance. To create it, use this guide.

- To download and install AWS SCT on the EC2 instance that you previously created, use this guide.

- Download the Amazon Redshift JDBC driver from this location.

- Download and install Amazon Corretto 11.

- A GCP service account that AWS SCT can use to connect to your source BigQuery project.

- Grant BigQuery Admin and Storage Admin roles to the service account.

- Copy the service account key file, which you created in the Google Cloud console, to the EC2 instance that has AWS SCT installed.

- Create a Cloud Storage bucket in GCP to store your source data during migration.

This walkthrough covers the following steps:

- Create an Amazon Redshift Serverless workgroup and namespace

- Create the S3 bucket and folder

- Convert and apply the BigQuery schema to Amazon Redshift using AWS SCT

- Connect to the BigQuery source

- Connect to the Amazon Redshift target

- Convert the BigQuery schema to Amazon Redshift

- Analyze the assessment report and address the action items

- Apply the converted schema to the target Amazon Redshift database

- Migrate data using AWS SCT data extraction agents

- Generate trust and key stores (optional)

- Install and start the data extraction agent

- Register the data extraction agent

- Add virtual partitions for large tables (optional)

- Create a local migration task

- Start the local data migration task

- View data in Amazon Redshift

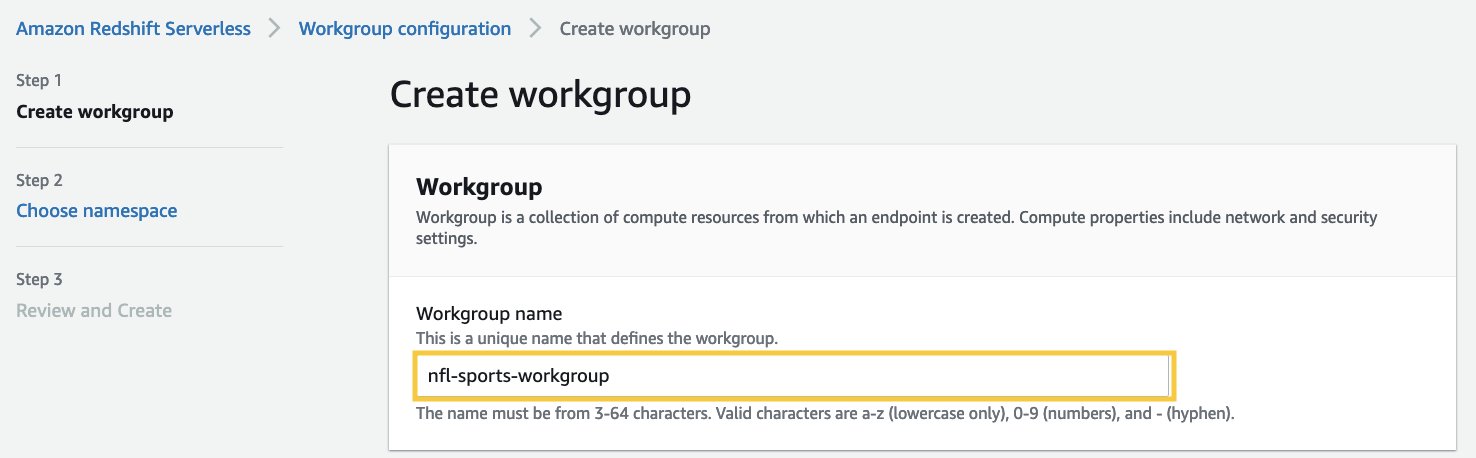

Create an Amazon Redshift Serverless Workgroup and Namespace

In this step, we create an Amazon Redshift Serverless workgroup and namespace. A workgroup is a collection of compute resources, and a namespace is a collection of database objects and users. Because they are separate, you can use namespaces and workgroups to isolate workloads and manage storage and compute resources independently in Amazon Redshift Serverless.

Follow these steps to create the Amazon Redshift Serverless workgroup and namespace:

- Navigate to the Amazon Redshift console.

- In the upper right, choose the AWS Region that you want to use.

- Expand the Amazon Redshift pane on the left and choose Redshift Serverless.

- Choose Create Workgroup.

- For Workgroup name, enter a name that describes the compute resources.

- Verify that the VPC is the same VPC that contains the EC2 instance running AWS SCT.

- Choose Next.

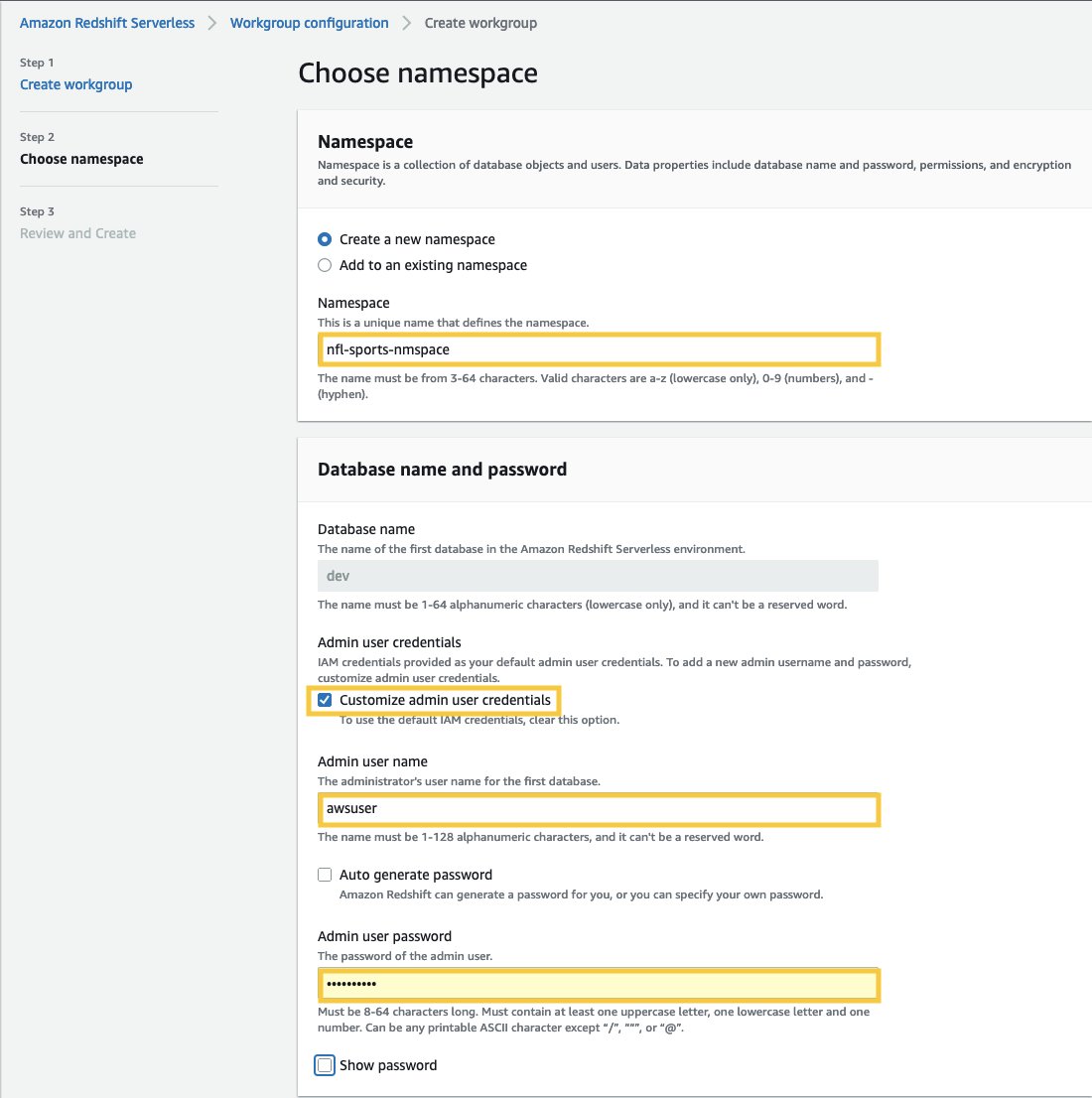

- For Namespace name, enter a name that describes your dataset.

- In the Database name and password section, select the Customize admin user credentials checkbox.

- For Admin user name, enter a username of your choice, for example awsuser.

- For Admin user password, enter a password of your choice, for example MyRedShiftPW2022.

- Choose Next. Data in an Amazon Redshift Serverless namespace is encrypted by default.

- On the Review and create page, choose Create.

- Create an AWS Identity and Access Management (IAM) role and set it as the default on your namespace, as described in the following steps. A namespace can have only one default IAM role.

- Navigate to the Amazon Redshift Serverless Dashboard.

- Under Namespaces / Workgroups, choose the namespace that you just created.

- Navigate to Security and encryption.

- Under Permissions, choose Manage IAM roles.

- Navigate to Manage IAM roles. Then, choose the Manage IAM roles drop-down and choose Create IAM role.

- Under Specify an Amazon S3 bucket for the IAM role to access, choose one of the following methods:

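- Choose No additional Amazon S3 bucket to allow the created IAM role to access only the S3 buckets with a name starting with redshift. If you choose this option, the staging bucket that you create later must also have a name starting with redshift.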

- Choose Any Amazon S3 bucket to allow the created IAM role to access all of the S3 buckets.

- Choose Specific Amazon S3 buckets to specify one or more S3 buckets for the created IAM role to access. Then choose one or more S3 buckets from the table.

- Choose Create IAM role as default. Amazon Redshift automatically creates and sets the IAM role as default.

- Capture the Endpoint for the Amazon Redshift Serverless workgroup that you just created.

- Navigate to the Amazon Redshift Serverless Dashboard.

- Under Namespaces / Workgroups, choose the workgroup that you just created.

- Under General information, copy the Endpoint.
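If you’d rather script this step than click through the console, the namespace and workgroup can also be created through the redshift-serverless API in boto3. The following is a minimal sketch, not part of the original walkthrough: the names, subnet IDs, and security group IDs are hypothetical placeholders, and the IAM role steps are left to the console. The request builders are kept separate from the API call (boto3 is imported only inside create_serverless) so you can review the parameters before anything is created.

```python
# Minimal sketch: request parameters for the boto3 "redshift-serverless"
# client. All names and IDs passed in are hypothetical placeholders.


def namespace_request(name: str, admin_user: str, admin_password: str,
                      db_name: str = "dev") -> dict:
    """Keyword arguments for create_namespace (admin credentials, database)."""
    return {
        "namespaceName": name,
        "adminUsername": admin_user,
        "adminUserPassword": admin_password,
        "dbName": db_name,
    }


def workgroup_request(name: str, namespace: str, subnet_ids: list,
                      security_group_ids: list) -> dict:
    """Keyword arguments for create_workgroup.

    The subnets should be in the same VPC as the EC2 instance that runs
    AWS SCT, and the security groups must allow that instance to reach
    the workgroup on port 5439.
    """
    return {
        "workgroupName": name,
        "namespaceName": namespace,
        "subnetIds": list(subnet_ids),
        "securityGroupIds": list(security_group_ids),
    }


def create_serverless(ns_args: dict, wg_args: dict, region: str) -> None:
    """Create the namespace, then the workgroup attached to it."""
    import boto3  # imported lazily so the builders work without boto3

    client = boto3.client("redshift-serverless", region_name=region)
    client.create_namespace(**ns_args)
    client.create_workgroup(**wg_args)
```

In practice, read the admin password from a secret store rather than hard-coding it, and wait until the workgroup status reported by get_workgroup is AVAILABLE before copying its endpoint.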

Create the S3 bucket and folder

During the data migration process, AWS SCT uses Amazon S3 as a staging area for the extracted data. Follow these steps to create the S3 bucket:

- Navigate to the Amazon S3 console.

- Choose Create bucket. The Create bucket wizard opens.

- For Bucket name, enter a unique DNS-compliant name for your bucket (for example, uniquename-bq-rs). See the rules for bucket naming when choosing a name.

- For AWS Region, choose the Region in which you created the Amazon Redshift Serverless workgroup.

- Choose Create bucket.

- In the Amazon S3 console, navigate to the S3 bucket that you just created (for example, uniquename-bq-rs).

- Choose Create folder.

- For Folder name, enter incoming and choose Create folder.
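Bucket names must follow the S3 naming rules. The following sketch checks a subset of those rules locally before you create the bucket, and shows what the console’s Create folder action amounts to: S3 has no real directories, so a folder is a zero-byte object whose key ends in a slash. The helper names are hypothetical, and the check is deliberately incomplete (for example, it ignores reserved prefixes and suffixes), so treat the S3 documentation as authoritative.

```python
import ipaddress
import re

# Subset of the S3 general purpose bucket naming rules: 3-63 characters,
# lowercase letters, digits, dots and hyphens, starting and ending with a
# letter or digit, no adjacent dots, and not formatted like an IP address.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if name passes this partial set of naming checks."""
    if not _BUCKET_RE.fullmatch(name) or ".." in name:
        return False
    try:
        ipaddress.IPv4Address(name)
        return False  # looks like an IP address, e.g. 192.168.5.4
    except ValueError:
        return True


def folder_key(folder: str) -> str:
    """Key of the zero-byte "folder" object the console creates."""
    return folder.strip("/") + "/"
```

With boto3, s3.put_object(Bucket="uniquename-bq-rs", Key=folder_key("incoming")) would create the same empty folder marker.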

Convert and apply BigQuery Schema to Amazon Redshift using AWS SCT

To convert the BigQuery schema to the Amazon Redshift format, we use AWS SCT. Start by logging in to the EC2 instance that you created previously, and then launch AWS SCT.

Follow these steps using AWS SCT:

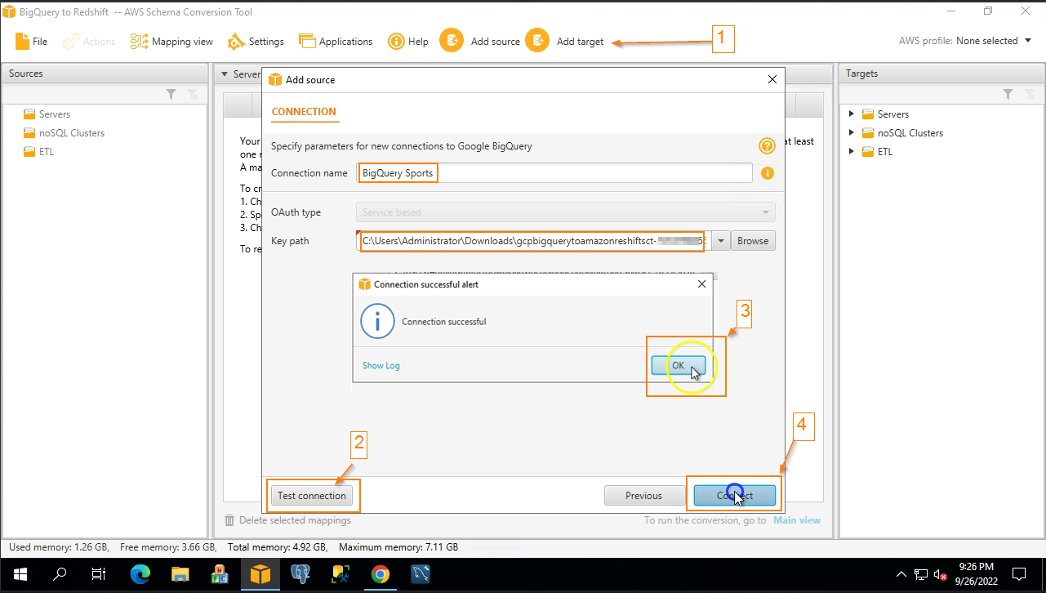

Connect to the BigQuery Source

- From the File menu, choose Create New Project.

- Choose a location to store your project files and data.

- Provide a meaningful but memorable name for your project, such as BigQuery to Amazon Redshift.

- To connect to the BigQuery source data warehouse, choose Add source from the main menu.

- Choose BigQuery and choose Next. The Add source dialog box appears.

- For Connection name, enter a name to describe the BigQuery connection. AWS SCT displays this name in the tree in the left panel.

- For Key path, provide the path of the service account key file that you created earlier in the Google Cloud console.

- Choose Test Connection to verify that AWS SCT can connect to your source BigQuery project.

- Once the connection is successfully validated, choose Connect.
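Before pointing AWS SCT at the key file, it can help to confirm that you copied the right file to the EC2 instance. A Google Cloud service account key is a JSON document; the following sketch checks a few of the fields such files contain (type, project_id, private_key, and client_email). The function names are hypothetical, and this is only a local sanity check, not a replacement for Test Connection.

```python
import json
from pathlib import Path

# Fields found in a JSON key file for a Google Cloud service account.
# This is a subset chosen for a quick sanity check.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def validate_key_data(data: dict) -> str:
    """Return the service account e-mail, or raise ValueError."""
    missing = [field for field in REQUIRED_FIELDS if not data.get(field)]
    if missing:
        raise ValueError(f"key file is missing fields: {missing}")
    if data["type"] != "service_account":
        raise ValueError(f"unexpected credential type: {data['type']!r}")
    return data["client_email"]


def check_service_account_key(path: str) -> str:
    """Load the key file from disk and validate it."""
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    return validate_key_data(data)
```

The returned e-mail identifies the principal that needs the BigQuery Admin and Storage Admin roles listed in the prerequisites.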

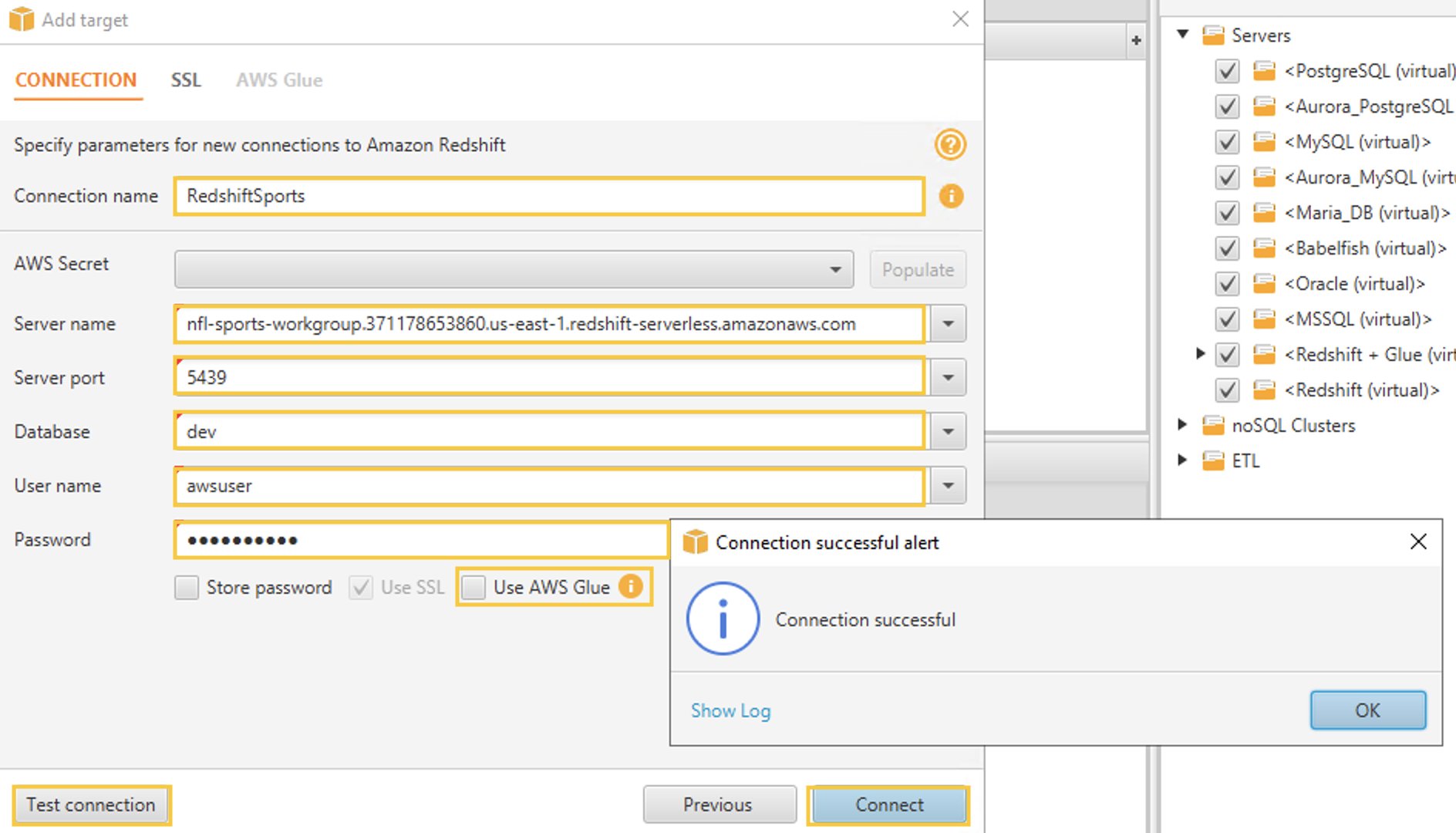

Connect to the Amazon Redshift Target

Follow these steps to connect to Amazon Redshift:

- In AWS SCT, choose Add Target from the main menu.

- Choose Amazon Redshift, then choose Next. The Add Target dialog box appears.

- For Connection name, enter a name to describe the Amazon Redshift connection. AWS SCT displays this name in the tree in the right panel.

- For Server name, enter the Amazon Redshift Serverless workgroup endpoint captured previously.

- For Server port, enter 5439.

- For Database, enter dev.

- For User name, enter the admin user name that you chose when creating the Amazon Redshift Serverless namespace.

- For Password, enter the admin user password that you chose when creating the Amazon Redshift Serverless namespace.

- Clear the Use AWS Glue checkbox.

- Choose Test Connection to verify that AWS SCT can connect to your target Amazon Redshift workgroup.

- Choose Connect to connect to the Amazon Redshift target.

Alternatively, you can use connection values that are stored in AWS Secrets Manager.
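The Server name field expects a host name, while the Endpoint copied from the workgroup’s General information section may also include the port and database. The following sketch, which assumes the copied value has the form host:port/database, splits it into the values for Server name, Server port, and Database, and builds the equivalent Amazon Redshift JDBC URL. The function names and the example host are hypothetical.

```python
from typing import NamedTuple


class RedshiftTarget(NamedTuple):
    host: str
    port: int
    database: str


def parse_endpoint(endpoint: str) -> RedshiftTarget:
    """Split an endpoint of the assumed form host:port/database.

    Missing parts fall back to the values used in this walkthrough
    (port 5439, database dev).
    """
    host_port, _, database = endpoint.strip().partition("/")
    host, _, port = host_port.partition(":")
    return RedshiftTarget(host, int(port or 5439), database or "dev")


def jdbc_url(target: RedshiftTarget) -> str:
    """Build a Redshift JDBC URL from the parsed parts."""
    return f"jdbc:redshift://{target.host}:{target.port}/{target.database}"
```

For example, parse_endpoint("my-wg.123456789012.us-east-1.redshift-serverless.amazonaws.com:5439/dev").host gives the value to paste into Server name.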

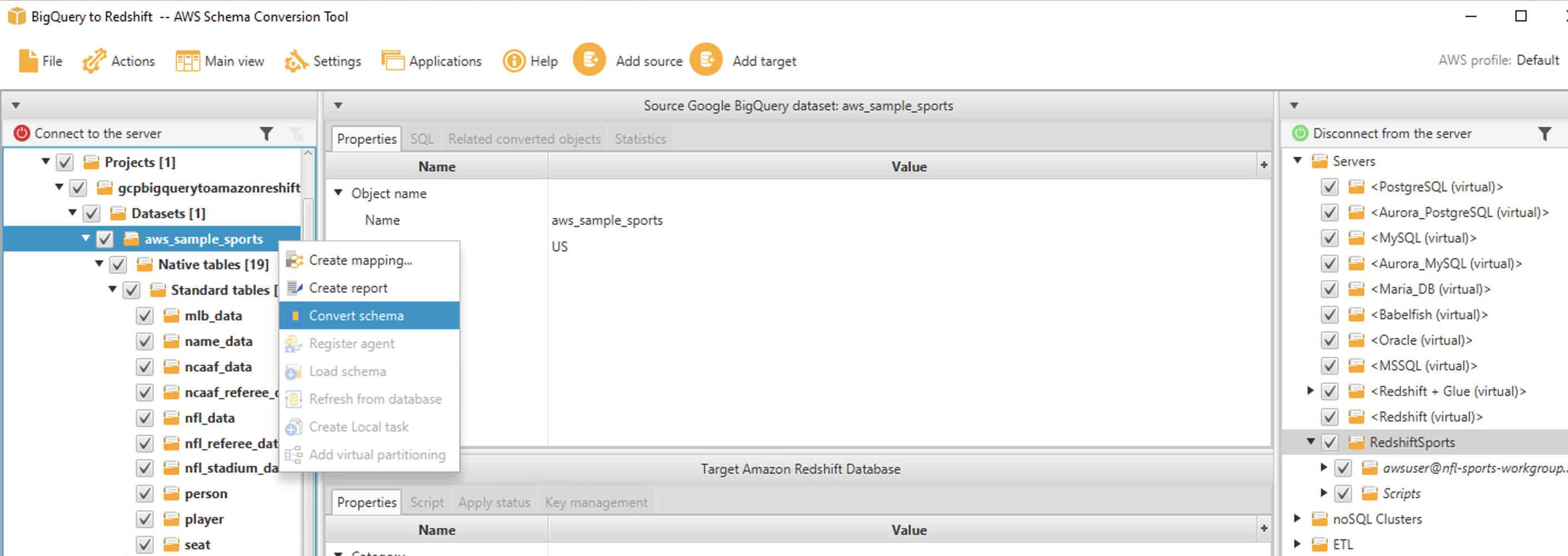

Convert the BigQuery schema to Amazon Redshift

After the source and target connections are successfully made, you see the source BigQuery object tree on the left pane and target Amazon Redshift object tree on the right pane.

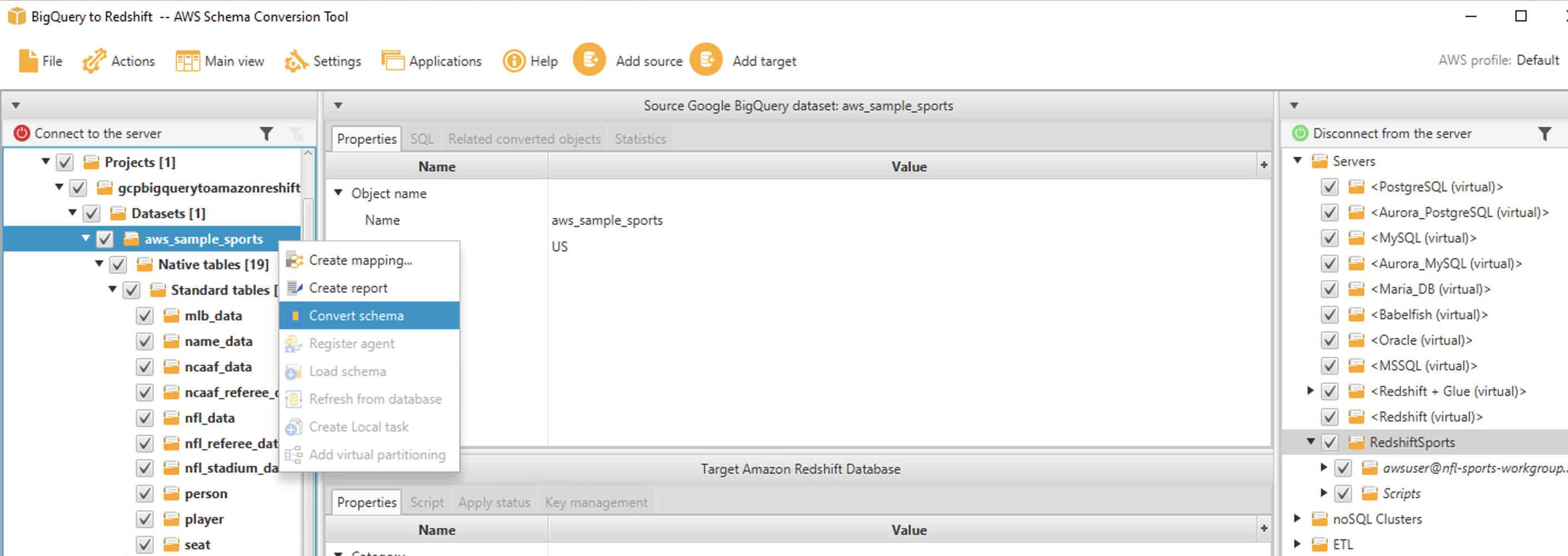

Follow these steps to convert BigQuery schema to the Amazon Redshift format:

- In the left pane, right-click the schema that you want to convert.

- Choose Convert Schema.

- A dialog box appears asking “The objects might already exist in the target database. Replace?” Choose Yes.

Once the conversion is complete, you see a new schema created on the Amazon Redshift pane (right pane) with the same name as your BigQuery schema.

The sample schema that we used has 16 tables, 3 views, and 3 procedures. You can see these objects in the Amazon Redshift format in the right pane. AWS SCT converts the BigQuery code and data objects to the Amazon Redshift format and flags any objects that need manual conversion in the assessment report. Furthermore, you can use AWS SCT to convert external SQL scripts, application code, or additional files with embedded SQL.

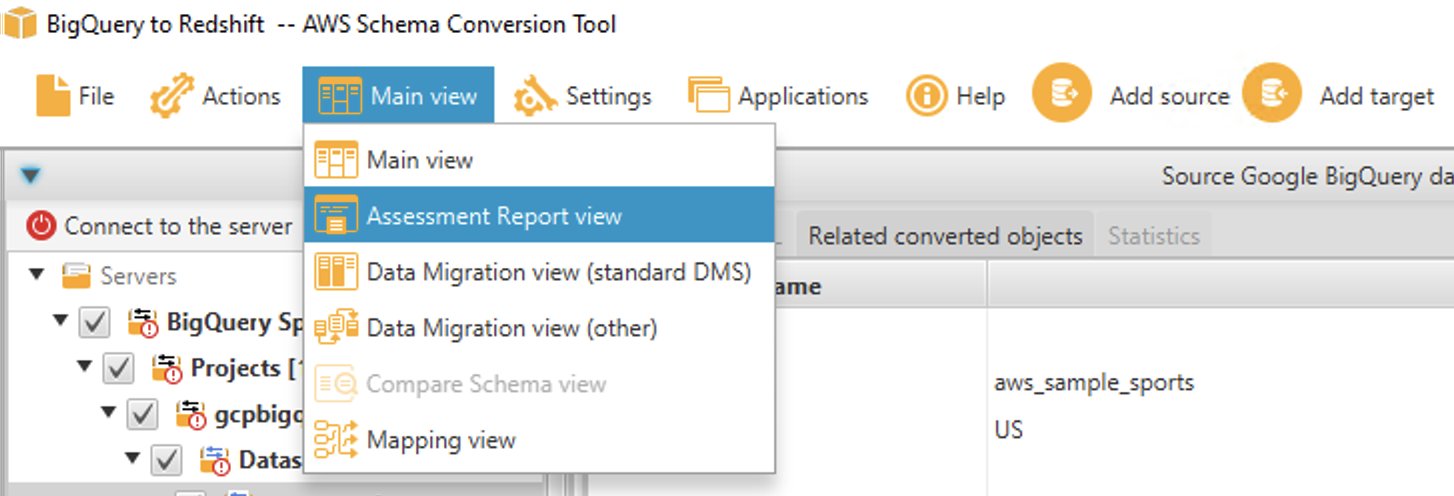

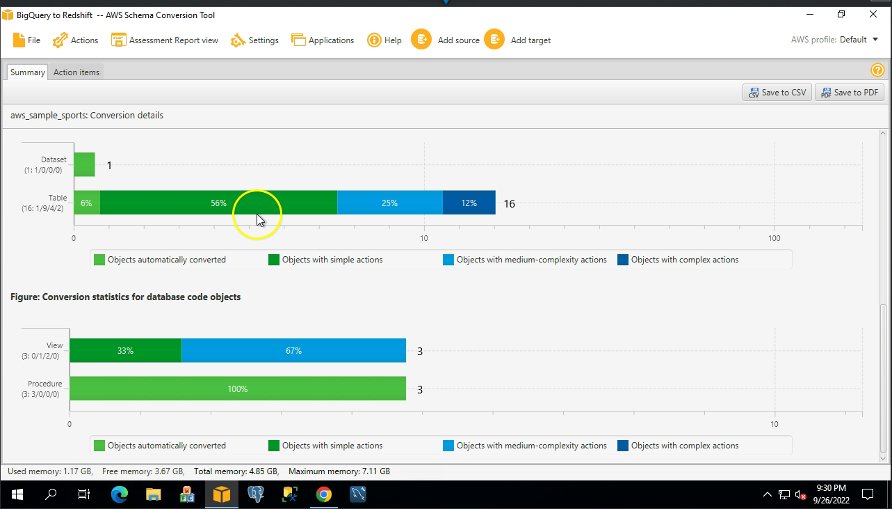

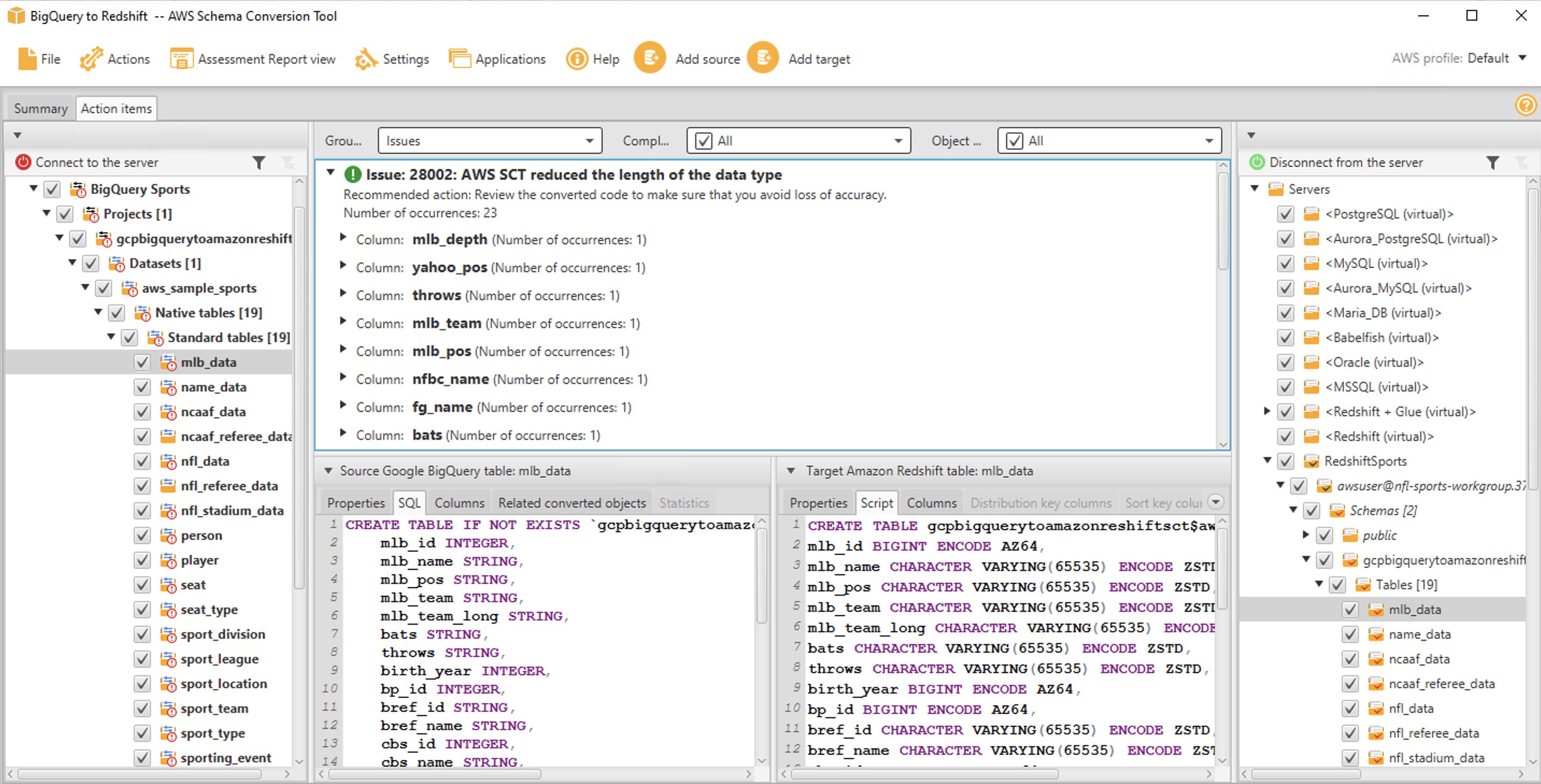

Analyze the assessment report and address the action items

AWS SCT creates an assessment report to assess the migration complexity. AWS SCT can convert the majority of code and database objects. However, some of the objects may require manual conversion. AWS SCT highlights these objects in blue in the conversion statistics diagram and creates action items with a complexity attached to them.

To view the assessment report, switch from the Main view to the Assessment Report view.

The Summary tab shows the objects that were converted automatically and the objects that weren’t. Green represents objects that were converted automatically or that have simple action items. Blue represents medium and complex action items that require manual intervention.

The Action Items tab shows the recommended actions for each conversion issue. If you select an action item from the list, AWS SCT highlights the object to which the action item applies.

The report also contains recommendations for how to manually convert each schema item. For example, after the assessment runs, the detailed reports for the database or schema show the effort required to design and implement the recommendations for each action item. For more information about deciding how to handle manual conversions, see Handling manual conversions in AWS SCT. AWS SCT also takes some actions automatically while converting the schema to Amazon Redshift; objects with these actions are marked with a red warning sign.

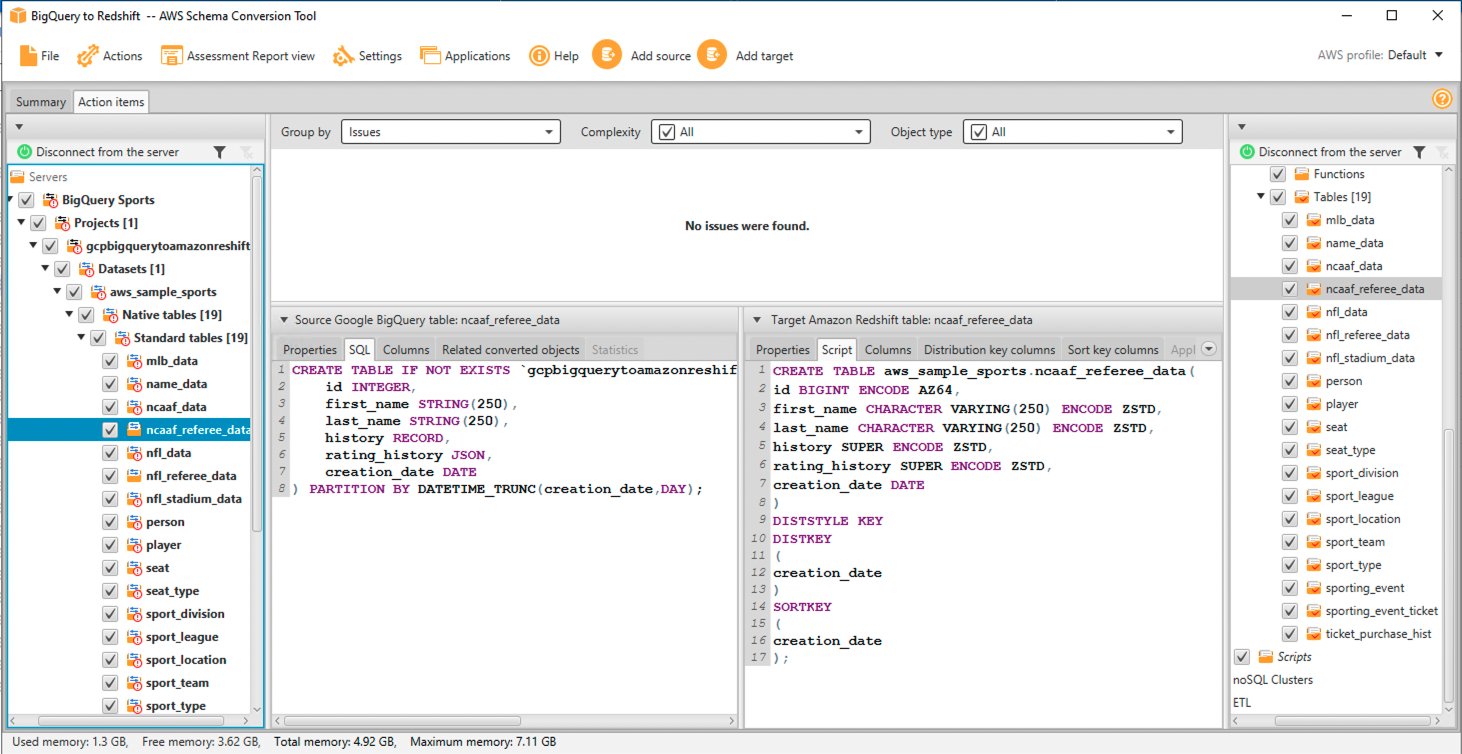

You can evaluate and inspect the DDL of each object by selecting it in the right pane, and you can also edit it as needed. For example, AWS SCT converts the RECORD and JSON data type columns in the BigQuery table ncaaf_referee_data to the SUPER data type in Amazon Redshift. The partition key of the ncaaf_referee_data table is converted to the distribution key and sort key in Amazon Redshift.
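To make the ncaaf_referee_data conversion concrete, the following sketch generates Amazon Redshift DDL in the same spirit: RECORD and JSON columns become SUPER, and the BigQuery partition column becomes both the DISTKEY and the SORTKEY. It is an illustration under stated assumptions, not AWS SCT’s conversion logic: the type map is a small hypothetical subset, and the schema and column names in the usage are made up because the post doesn’t list the table’s columns.

```python
# Hypothetical, partial BigQuery-to-Redshift type map for illustration
# only; it is not the mapping table that AWS SCT uses.
TYPE_MAP = {
    "INT64": "BIGINT",
    "FLOAT64": "DOUBLE PRECISION",
    "BOOL": "BOOLEAN",
    "STRING": "VARCHAR(65535)",
    "DATE": "DATE",
    "RECORD": "SUPER",  # nested records become semi-structured SUPER
    "JSON": "SUPER",    # JSON documents also map to SUPER
}


def redshift_ddl(table: str, columns: list, partition_col: str) -> str:
    """Build CREATE TABLE DDL; raises KeyError for unmapped types."""
    if partition_col not in {name for name, _ in columns}:
        raise ValueError(f"unknown partition column: {partition_col}")
    body = ",\n".join(f"    {name} {TYPE_MAP[bq_type]}" for name, bq_type in columns)
    # The BigQuery partition column is used as both distribution and sort key.
    return (
        f"CREATE TABLE {table} (\n{body}\n)\n"
        f"DISTKEY ({partition_col})\n"
        f"SORTKEY ({partition_col});"
    )
```

For example, redshift_ddl("sports.ncaaf_referee_data", [("game_id", "INT64"), ("game_date", "DATE"), ("referee", "RECORD")], "game_date") yields a table whose referee column is SUPER and whose game_date column is the distribution and sort key. Note that a SUPER column can’t serve as a distribution or sort key, so the partition column must map to a scalar type.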

Apply converted schema to target Amazon Redshift

To apply the converted schema to Amazon Redshift, select the converted schema in the right pane, right-click, and then choose Apply to database.

Migrate data from BigQuery to Amazon Redshift using AWS SCT data extraction agents

AWS SCT extraction agents extract data from your source database and migrate it to the AWS Cloud. In this walkthrough, we show how to configure AWS SCT extraction agents to extract data from BigQuery and migrate it to Amazon Redshift.

First, install the AWS SCT extraction agent on the same Windows instance that has AWS SCT installed. For better performance, we recommend installing extraction agents on a separate Linux instance if possible. For large datasets, you can use several data extraction agents to increase the data migration speed.

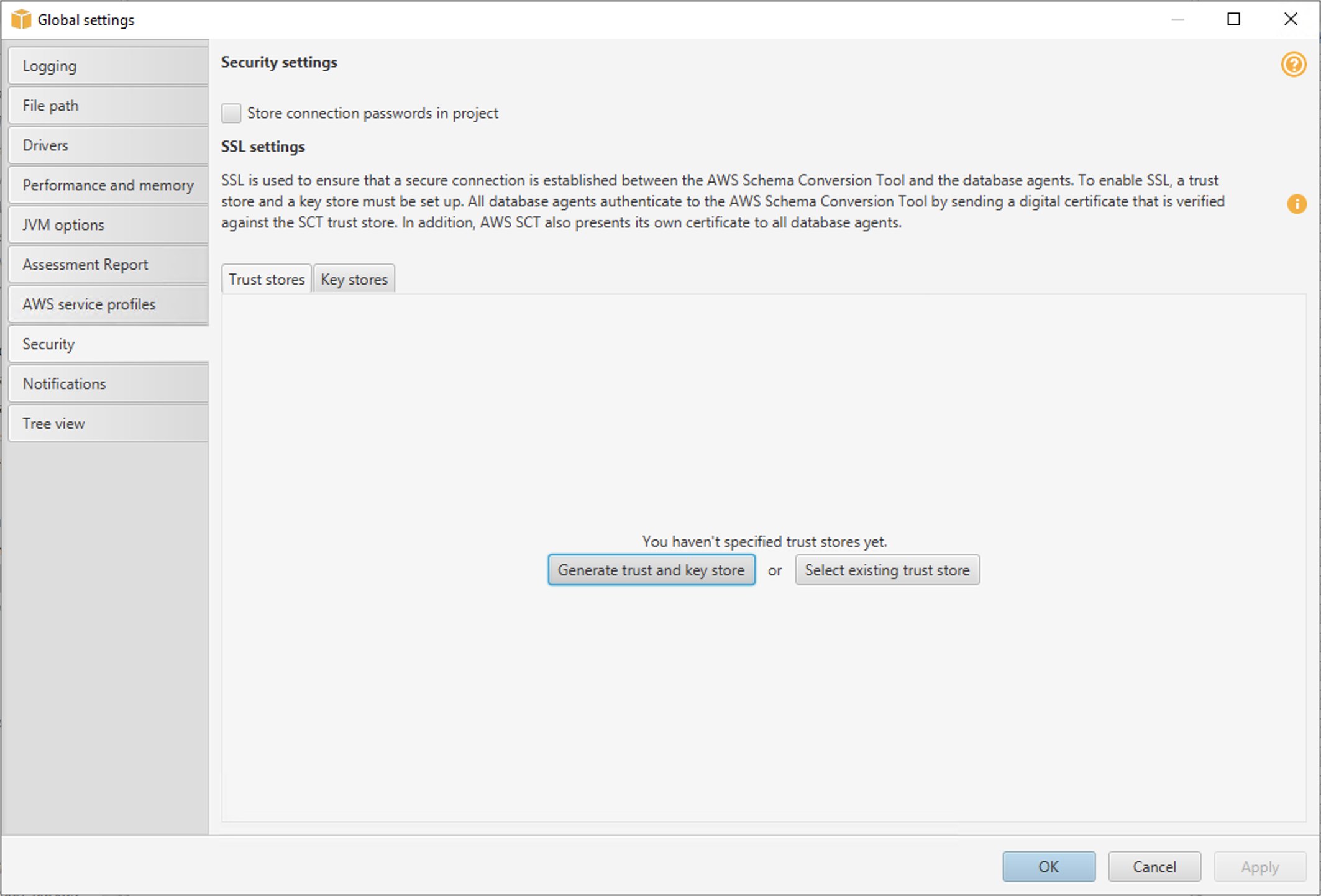

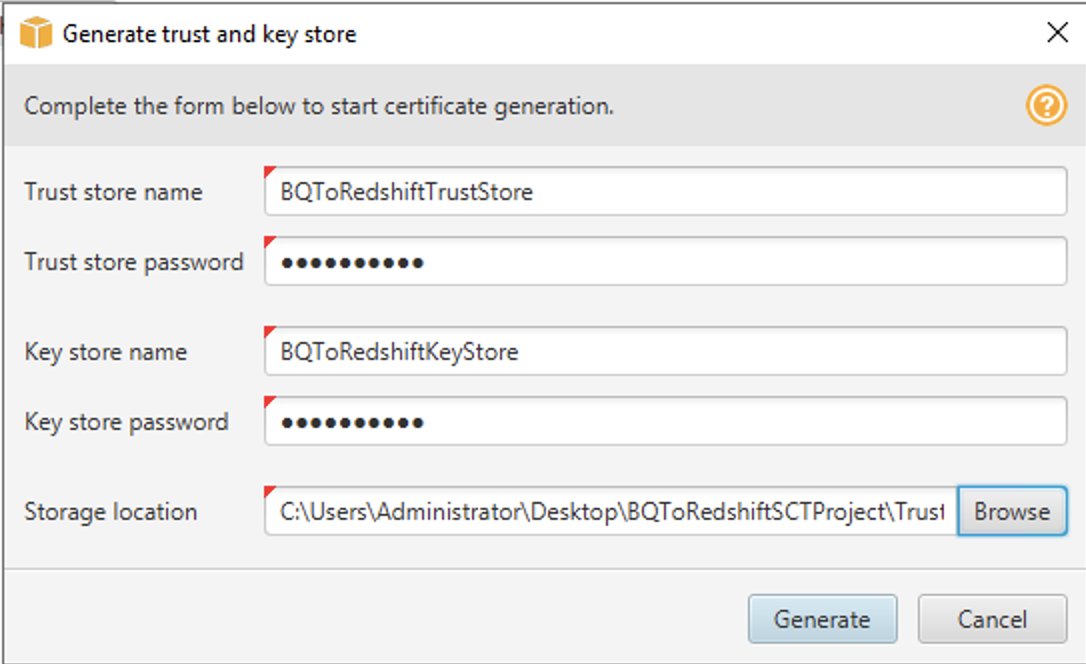

Generating trust and key stores (optional)

You can use Secure Sockets Layer (SSL) encrypted communication with AWS SCT data extraction agents. With SSL, all data passed between the applications remains private and intact. To use SSL communication, you must generate trust and key stores using AWS SCT. You can skip this step if you don’t want to use SSL, but we recommend using SSL for production workloads.

Follow these steps to generate trust and key stores:

- In AWS SCT, navigate to Settings → Global Settings → Security.

- Choose Generate trust and key store.

- Enter the name and password for trust and key stores and choose a location where you would like to store them.

- Choose Generate.

Install and configure Data Extraction Agent

In the AWS SCT installation package, you can find a subfolder named agents (\aws-schema-conversion-tool-1.0.latest.zip\agents). Locate and run the installer file with a name like aws-schema-conversion-tool-extractor-xxxxxxxx.msi.

During installation, follow these steps to configure the AWS SCT data extraction agent:

- For Listening port, enter the port number on which the agent listens. It is 8192 by default.

- For Add a source vendor, enter no, as you don’t need drivers to connect to BigQuery.

- For Add the Amazon Redshift driver, enter yes.

- For Enter Redshift JDBC driver file or files, enter the location where you downloaded Amazon Redshift JDBC drivers.

- For Working folder, enter the path where the AWS SCT data extraction agent will store the extracted data. The working folder can be on a different computer from the agent, and a single working folder can be shared by multiple agents on different computers.

- For Enable SSL communication, enter yes, or enter no if you don’t want to use SSL.

- For Key store, enter the storage location chosen when creating the trust and key store.

- For Key store password, enter the password for the key store.

- For Enable client SSL authentication, enter yes.

- For Trust store, enter the storage location chosen when creating the trust and key store.

- For Trust store password, enter the password for the trust store.

Starting Data Extraction Agent(s)

Use the following procedure to start extraction agents. Repeat this procedure on each computer that has an extraction agent installed.

Extraction agents act as listeners. When you start an agent with this procedure, the agent starts listening for instructions. You send the agents instructions to extract data from your data warehouse in a later section.

To start the extraction agent, navigate to the AWS SCT Data Extractor Agent directory. For example, in Microsoft Windows, double-click C:\Program Files\AWS SCT Data Extractor Agent\StartAgent.bat.

- On the computer that has the extraction agent installed, run the start command for your operating system from a command prompt or terminal window.

- To check the status of the agent, run the same command but replace start with status.

- To stop an agent, run the same command but replace start with stop.

- To restart an agent on Windows, run the RestartAgent.bat file in the same directory.
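Before registering an agent, you can confirm that something is listening on the agent’s port (8192 by default). The following sketch only tests that a TCP connection succeeds; it doesn’t speak the agent’s protocol, so a successful result doesn’t prove the agent is healthy. The function name is hypothetical.

```python
import socket


def agent_is_listening(host: str, port: int = 8192, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable, or timed out
        return False
```

For example, run agent_is_listening("127.0.0.1") on the AWS SCT workstation, or pass the agent machine’s host name when the agent runs elsewhere. If it returns False, check that the agent started and that firewall or security group rules allow the listening port.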

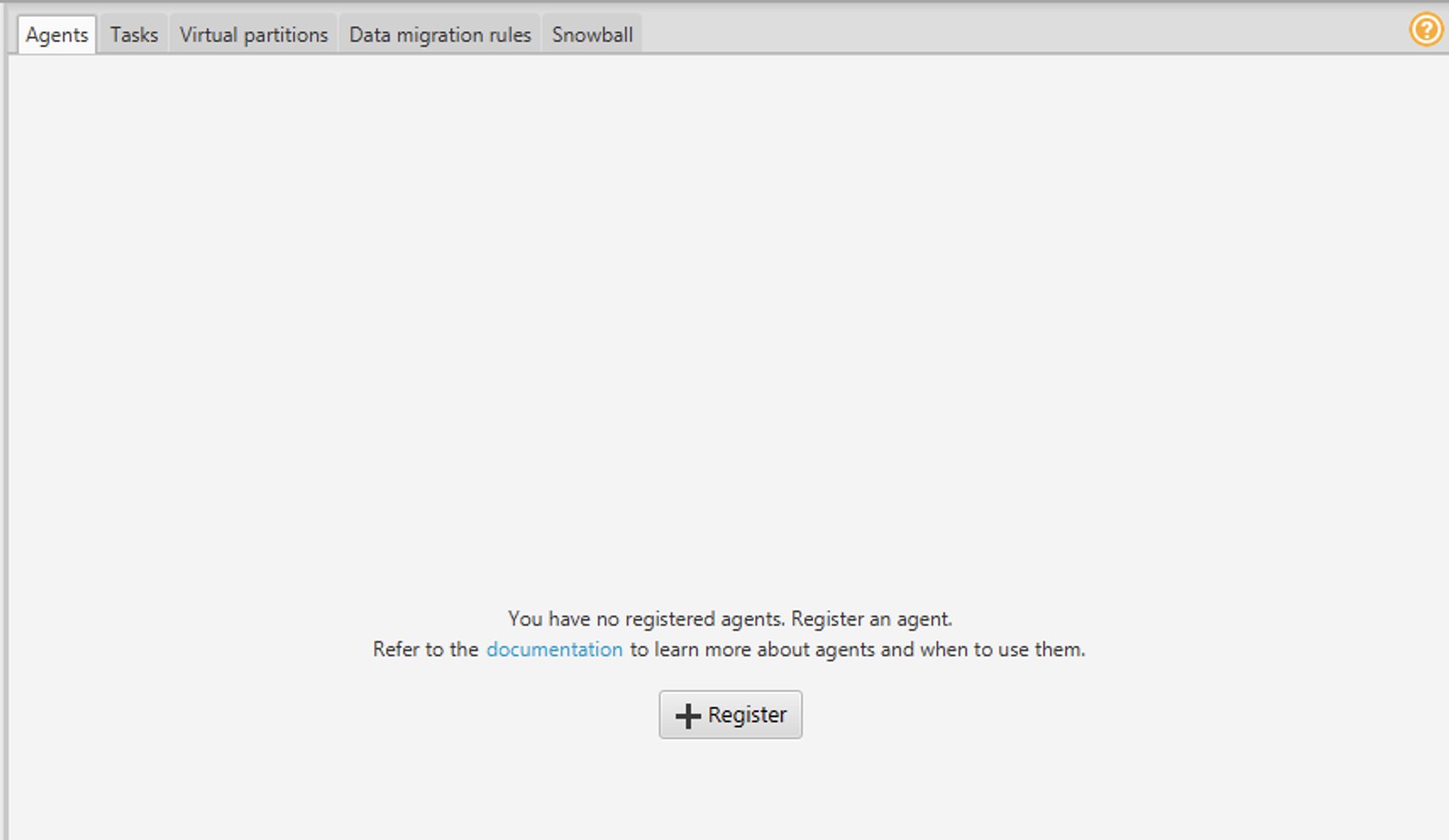

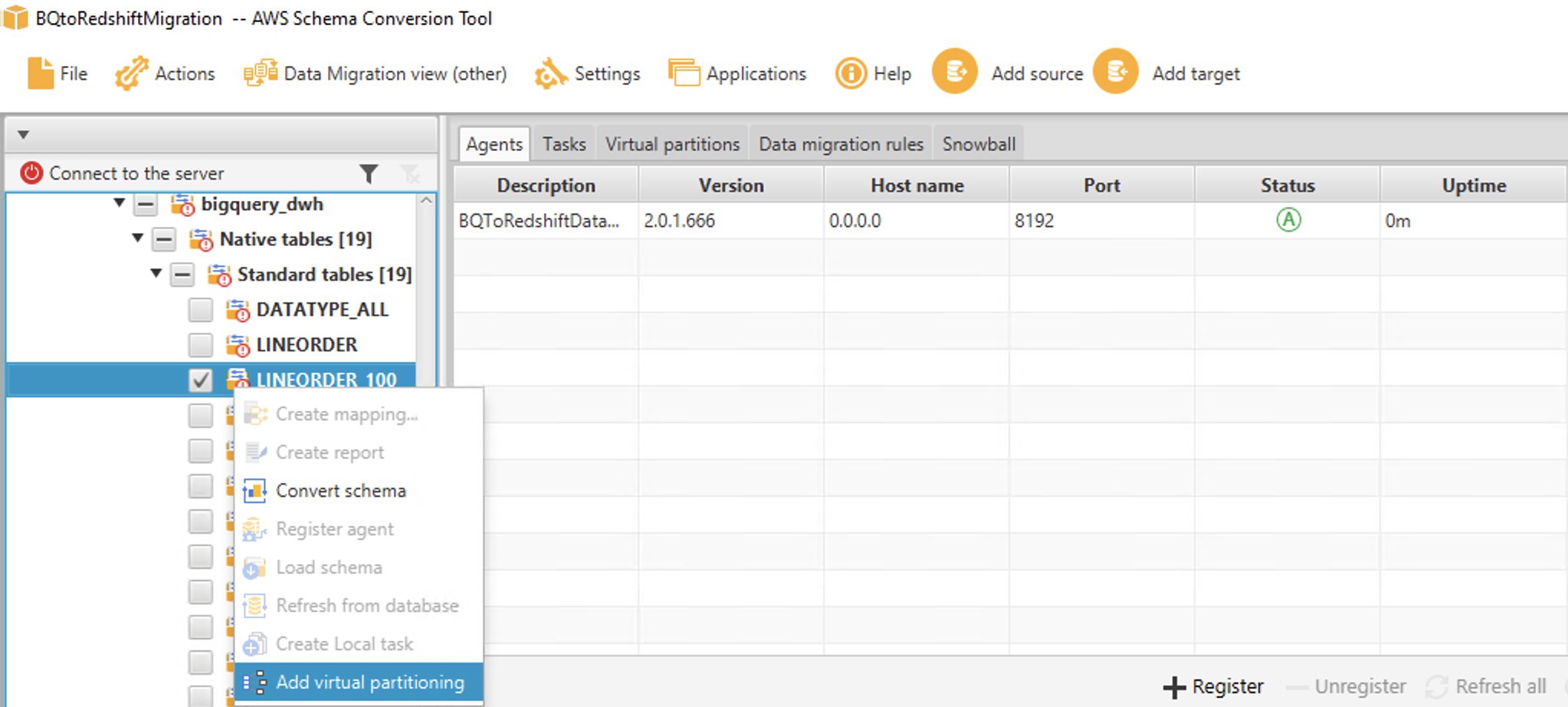

Register the Data Extraction Agent

Follow these steps to register the Data Extraction Agent:

- In AWS SCT, change the view to Data Migration view (other) and choose + Register.

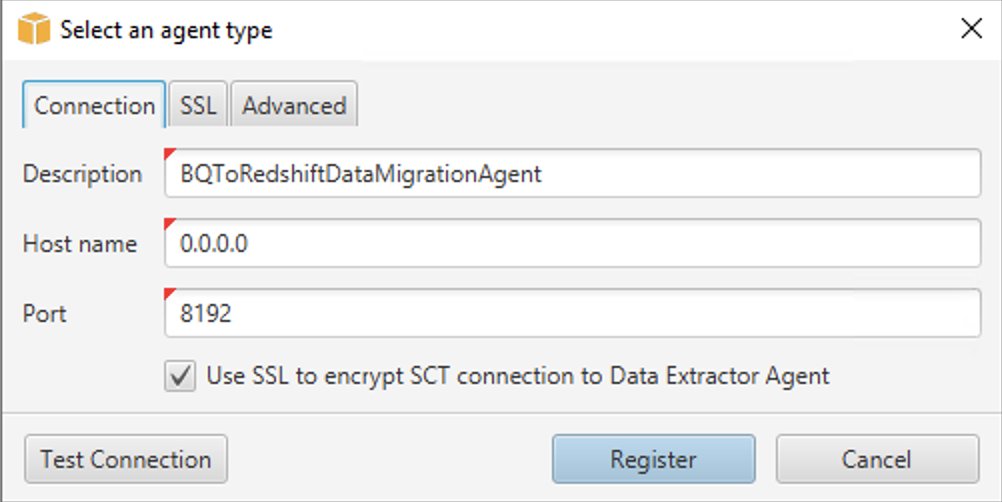

- In the connection tab:

- For Description, enter a name to identify the Data Extraction Agent.

- For Host name, if you installed the data extraction agent on the same workstation as AWS SCT, enter 0.0.0.0 to indicate the local host. Otherwise, enter the host name of the machine on which the AWS SCT data extraction agent is installed. We recommend installing data extraction agents on Linux for better performance.

- For Port, enter the number entered for the Listening Port when installing the AWS SCT Data Extraction Agent.

- If you’re using SSL, select the checkbox to use SSL to encrypt the AWS SCT connection to the data extraction agent.

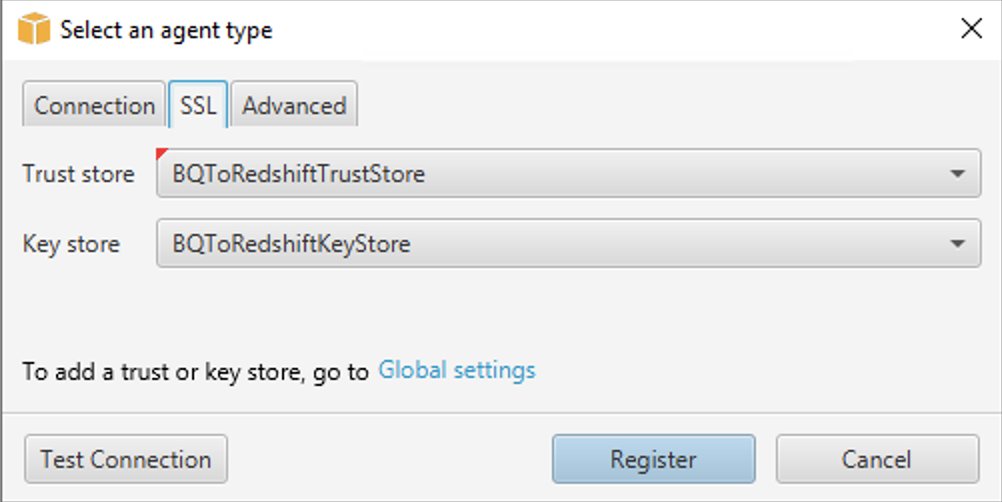

- If you’re using SSL, then in the SSL Tab:

- For Trust store, choose the trust store name created when generating the trust and key stores.

- For Key store, choose the key store name created when generating the trust and key stores.

- Choose Test Connection.

- Once the connection is validated successfully, choose Register.

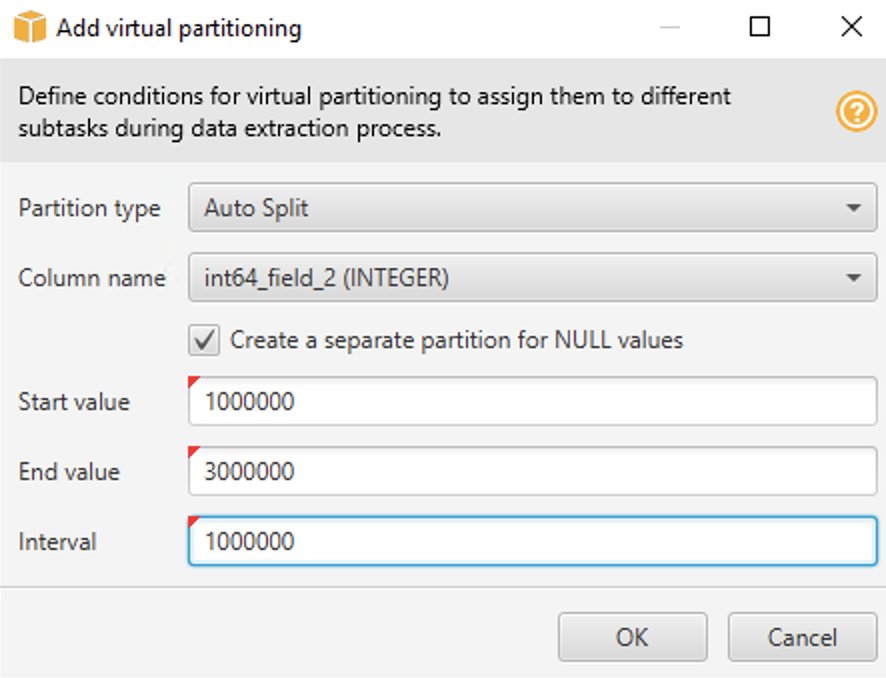

Add virtual partitions for large tables (optional)

You can use AWS SCT to create virtual partitions to optimize migration performance. When virtual partitions are created, AWS SCT extracts the data for the partitions in parallel. We recommend creating virtual partitions for large tables.

Follow these steps to create virtual partitions:

- Deselect all objects on the source database view in AWS SCT.

- Choose the table for which you would like to add virtual partitioning.

- Right-click on the table, and choose Add Virtual Partitioning.

- You can use List, Range, or Auto Split partitions. To learn more about virtual partitioning, refer to Use virtual partitioning in AWS SCT. In this example, we use Auto split partitioning, which generates range partitions automatically: you specify the start value, end value, and partition size, and AWS SCT determines the partitions. As a demonstration, on the Lineorder table:

- For Start Value, enter 1000000.

- For End Value, enter 3000000.

- For Interval, enter 1000000 to indicate partition size.

- Choose Ok.

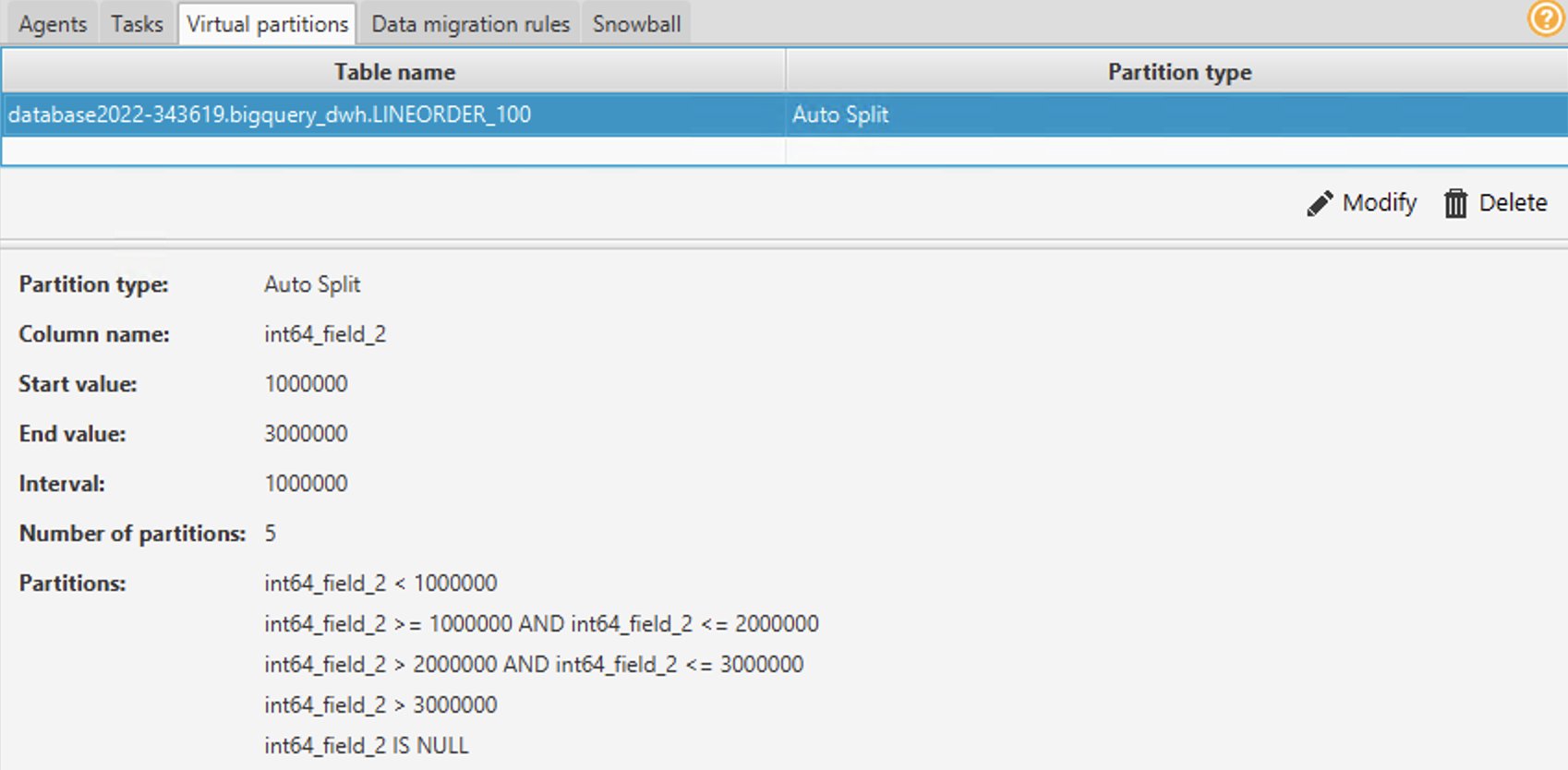

You can see the partitions automatically generated under the Virtual Partitions tab. In this example, AWS SCT automatically created the following five partitions for the field:

- <1000000

- >=1000000 and <=2000000

- >2000000 and <=3000000

- >3000000

- IS NULL
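The auto-split result above can be reproduced with a short loop. This sketch is a hypothetical reconstruction that mirrors the five partitions listed, not AWS SCT’s implementation, and the column name is a placeholder because the post doesn’t name the field. It produces values below the start, one range per interval (the first closed on both ends, later ones open at the bottom), values above the end, and NULLs. Together the predicates cover every row exactly once, which is what lets the ranges be extracted in parallel.

```python
def auto_split_partitions(column: str, start: int, end: int, interval: int) -> list:
    """Return WHERE-clause predicates that split column into ranges."""
    if interval <= 0 or end <= start:
        raise ValueError("need interval > 0 and end > start")
    parts = [f"{column} < {start}"]
    lower, first = start, True
    while lower < end:
        upper = min(lower + interval, end)
        # The first range includes its lower bound; later ranges start
        # just above the previous upper bound so no value is repeated.
        op = ">=" if first else ">"
        parts.append(f"{column} {op} {lower} AND {column} <= {upper}")
        lower, first = upper, False
    parts.append(f"{column} > {end}")
    parts.append(f"{column} IS NULL")
    return parts
```

With the values entered above, auto_split_partitions("order_key", 1000000, 3000000, 1000000) returns five predicates that match the partitions in the list.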

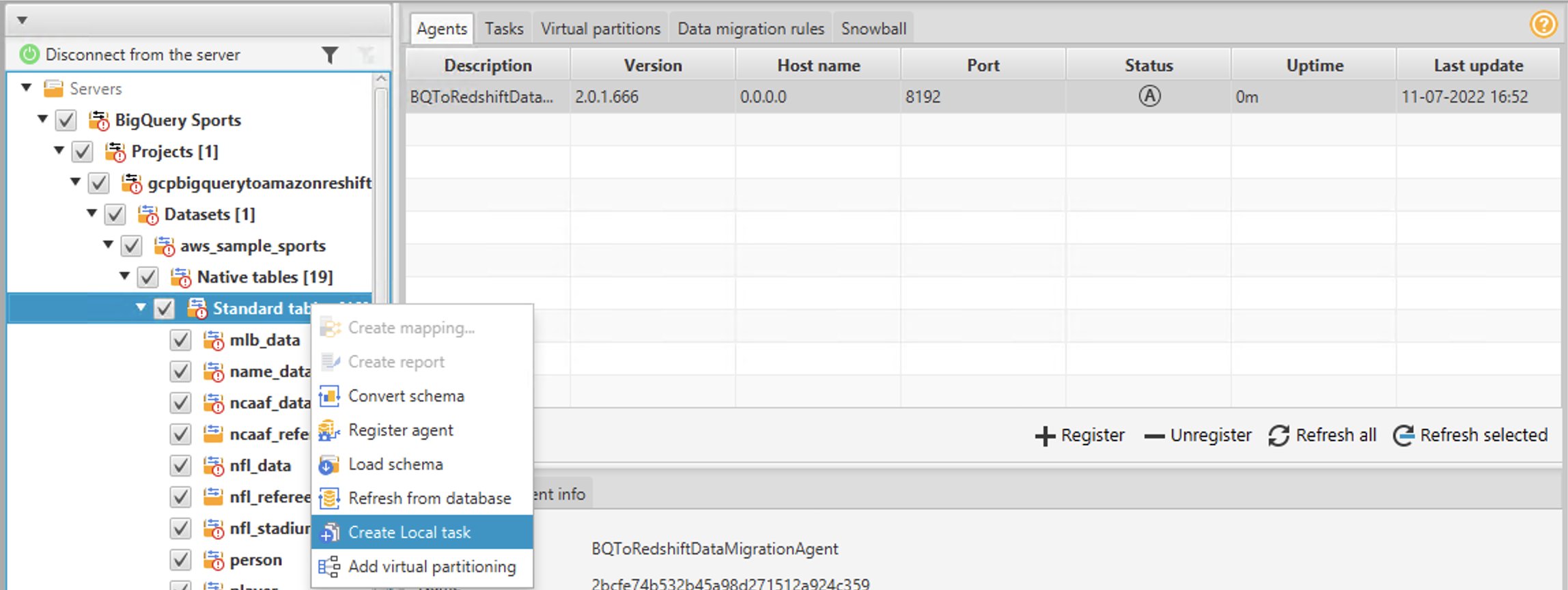

Create a local migration task

To migrate data from BigQuery to Amazon Redshift, create, run, and monitor the local migration task from AWS SCT. This step uses the data extraction agent to migrate data by creating a task.

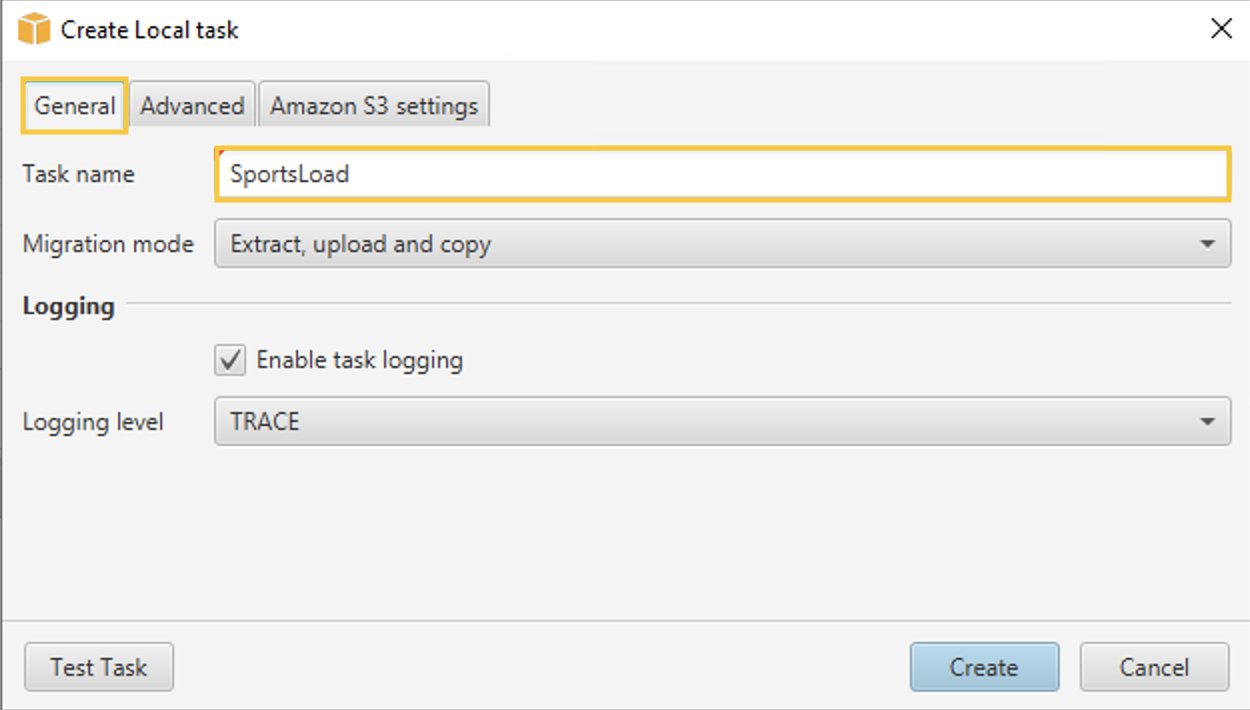

Follow these steps to create a local migration task:

- In AWS SCT, under the schema name in the left pane, right-click on Standard tables.

- Choose Create Local task.

- Choose one of the following three migration modes:

- Extract source data and store it on the local PC or virtual machine (VM) where the agent runs.

- Extract data and upload it to an S3 bucket.

- Extract data, upload it to an S3 bucket, and then copy it into Amazon Redshift. For this walkthrough, choose Extract upload and copy.

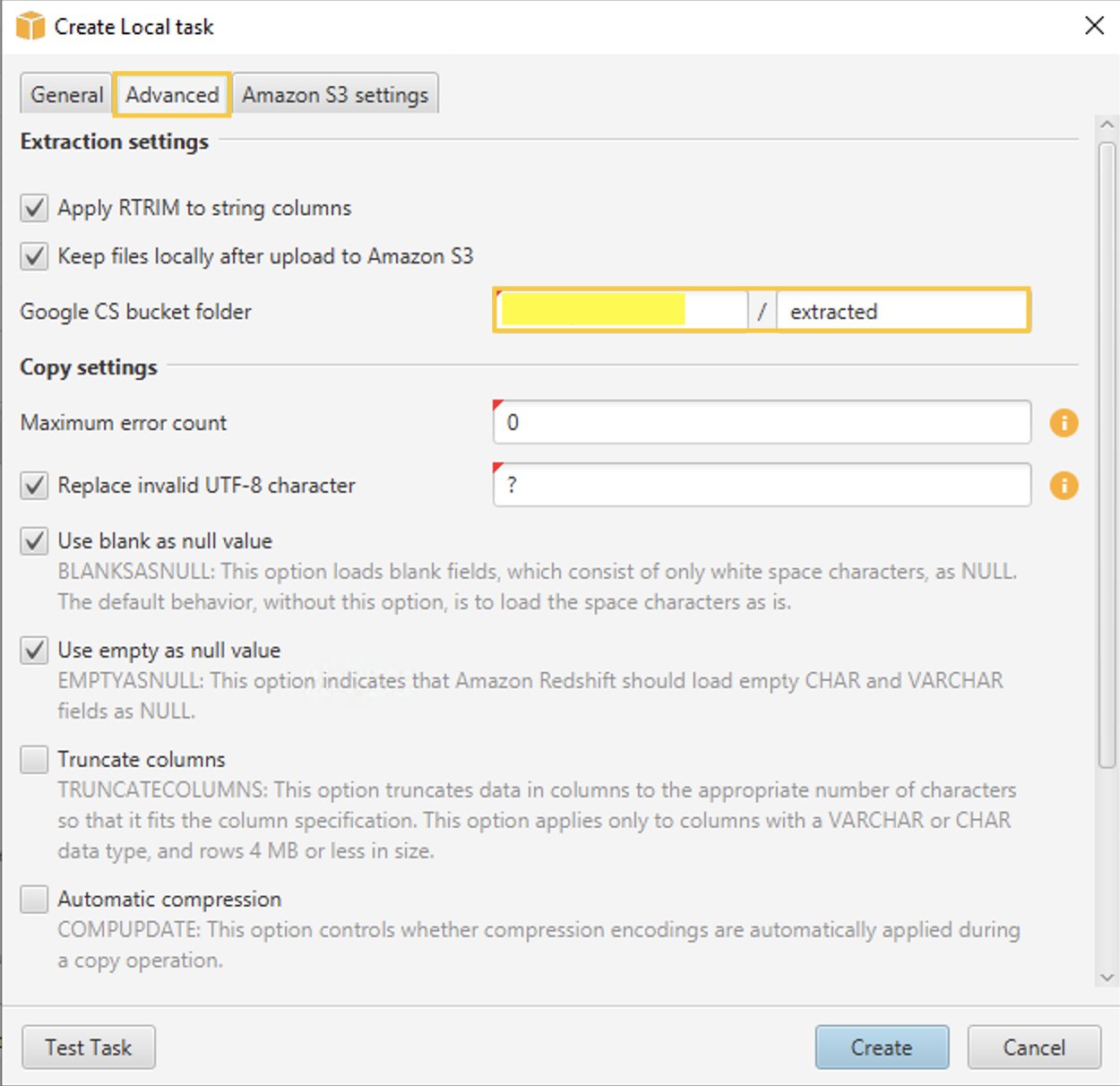

- In the Advanced tab, for Google CS bucket folder, enter the Google Cloud Storage bucket/folder that you created earlier in the Google Cloud console. AWS SCT stores the extracted data in this location.

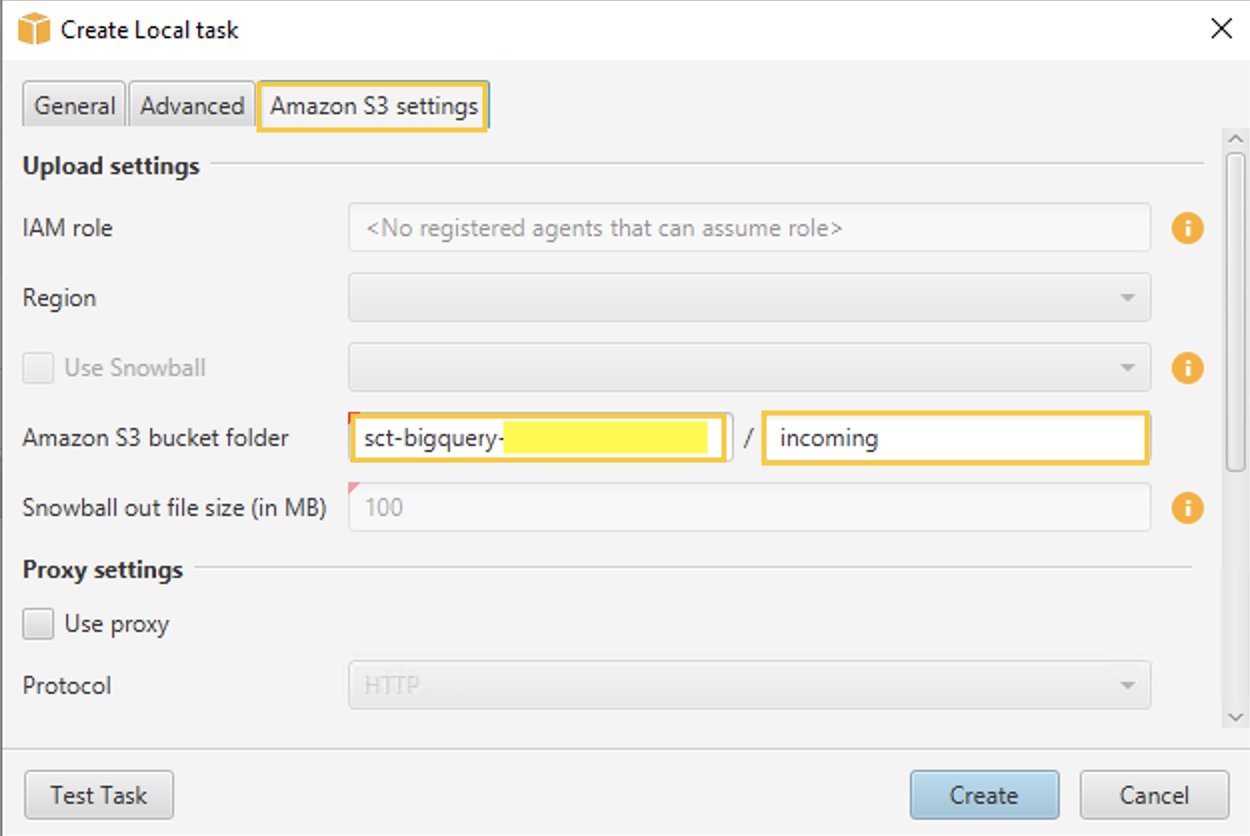

- In the Amazon S3 Settings tab, for Amazon S3 bucket folder, provide the bucket and folder names of the S3 bucket that you created earlier. The AWS SCT data extraction agent uploads the data into the S3 bucket/folder before copying to Amazon Redshift.

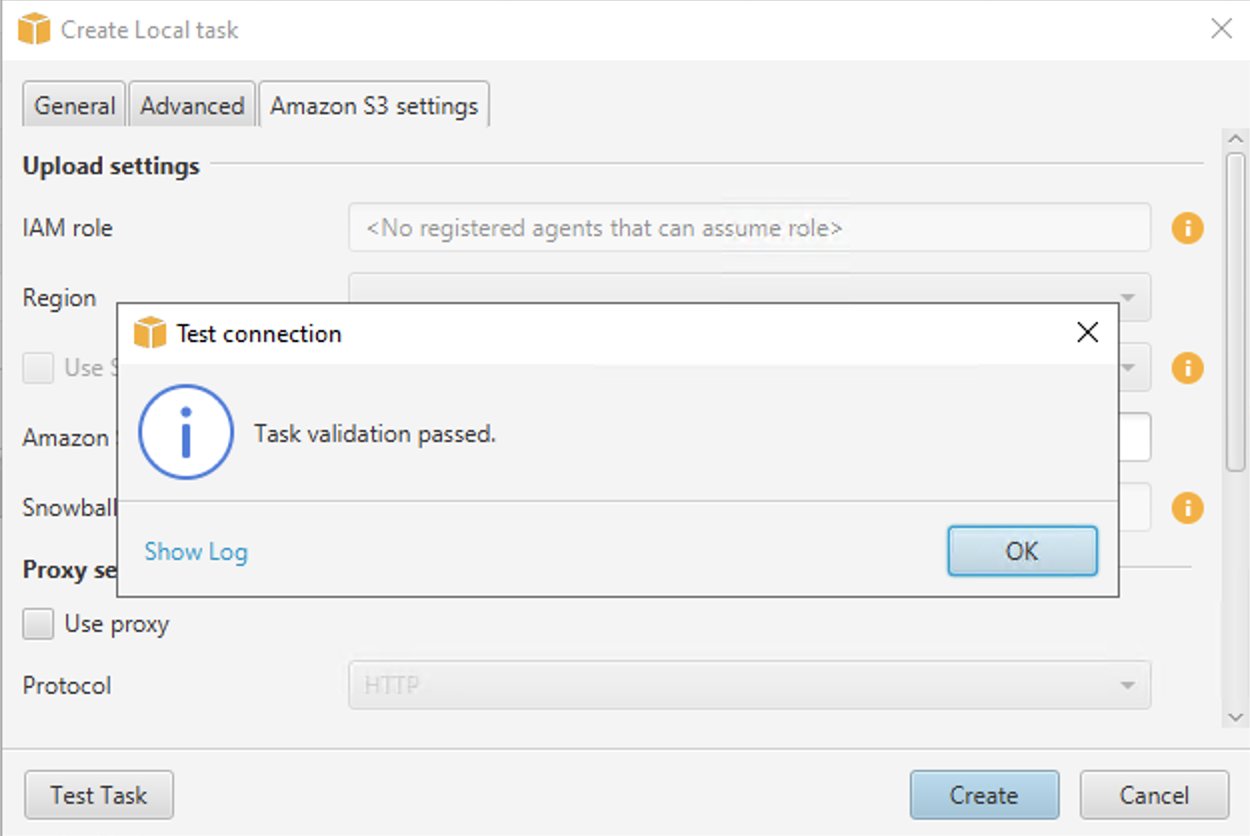

- Choose Test Task.

- Once the task is successfully validated, choose Create.

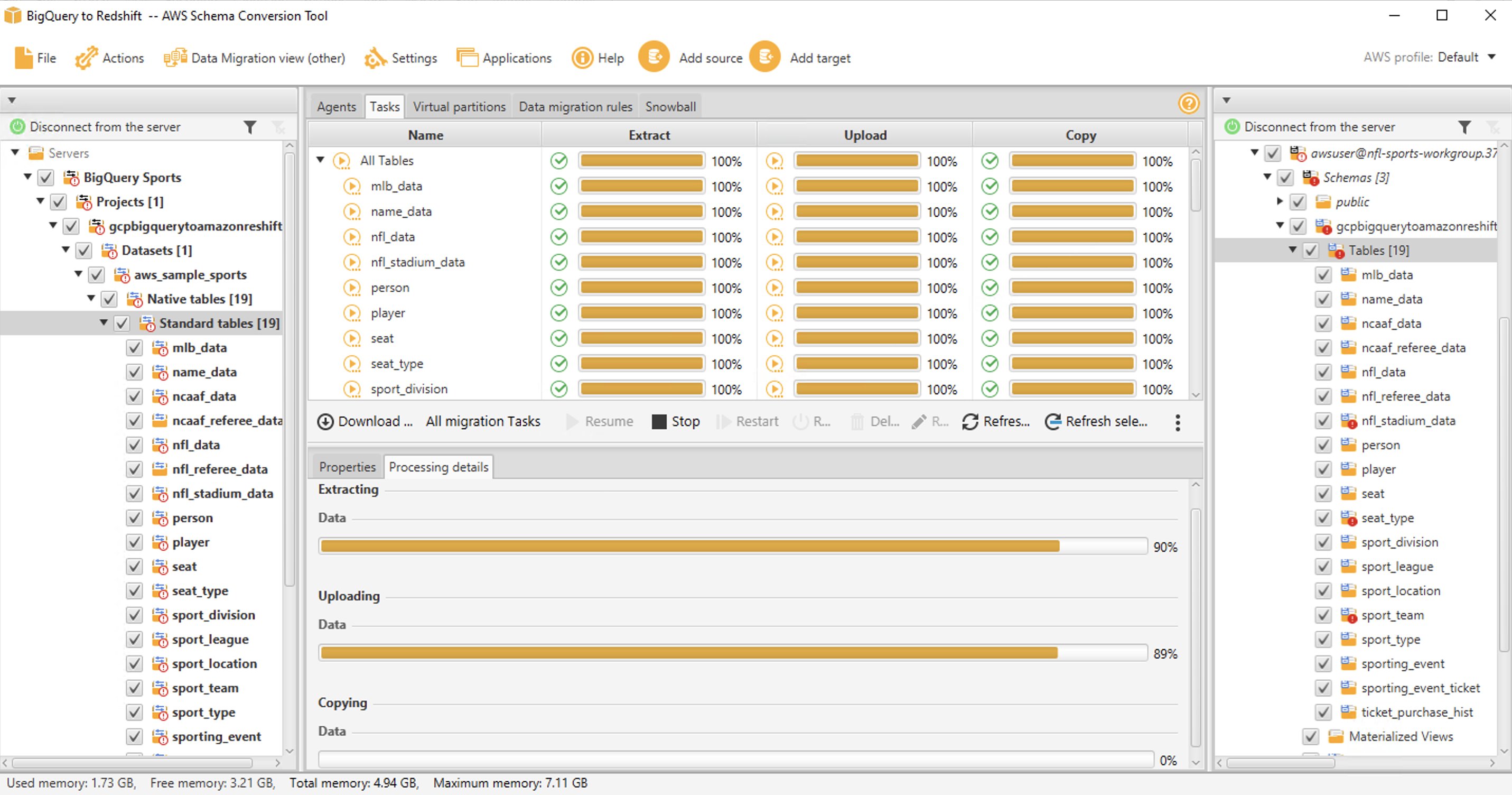

Start the Local Data Migration Task

To start the task, choose the Start button in the Tasks tab.

- First, the data extraction agent extracts data from BigQuery into the Cloud Storage bucket.

- Then, the agent uploads the data to Amazon S3 and runs a COPY command to load it into Amazon Redshift.

- When the task completes, AWS SCT has migrated the data from the source BigQuery table to the Amazon Redshift table.
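The last step loads the files staged in Amazon S3 with an Amazon Redshift COPY command. The following sketch builds a statement of that general shape. It assumes a per-table prefix under the incoming folder and CSV files; the post doesn’t say which prefix layout or file format AWS SCT uses, so both are placeholders, as are the function names. IAM_ROLE default relies on the default IAM role that you set on the namespace earlier.

```python
def s3_uri(bucket: str, folder: str, table: str) -> str:
    """Hypothetical staging layout: one prefix per table under the folder."""
    return f"s3://{bucket}/{folder.strip('/')}/{table}/"


def copy_statement(target_table: str, source_uri: str) -> str:
    """Build a COPY that loads every object under the source_uri prefix.

    IAM_ROLE default uses the namespace's default IAM role.
    CSV is an assumed file format for illustration.
    """
    return (
        f"COPY {target_table}\n"
        f"FROM '{source_uri}'\n"
        "IAM_ROLE default\n"
        "FORMAT AS CSV;"
    )
```

Because COPY treats the FROM value as a key prefix, pointing it at a folder loads all files under that folder in parallel.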

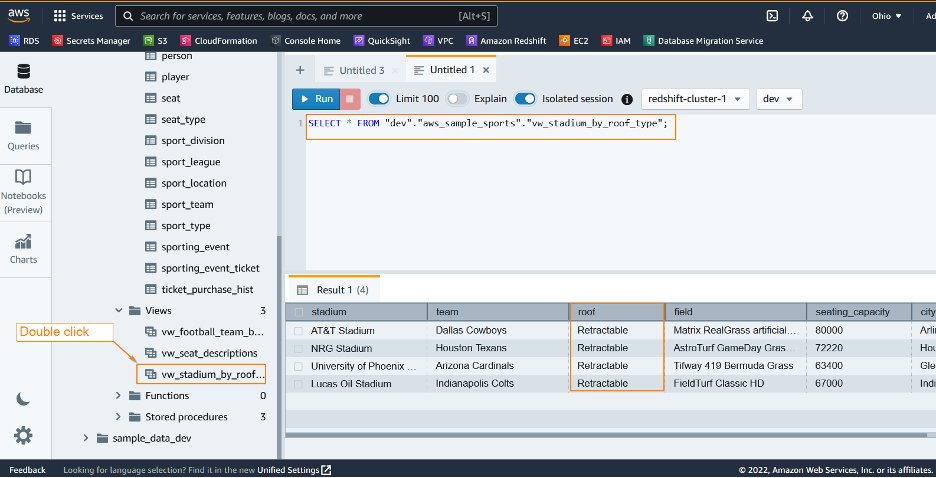

View data in Amazon Redshift

After the data migration task executes successfully, you can connect to Amazon Redshift and validate the data.

Follow these steps to validate the data in Amazon Redshift:

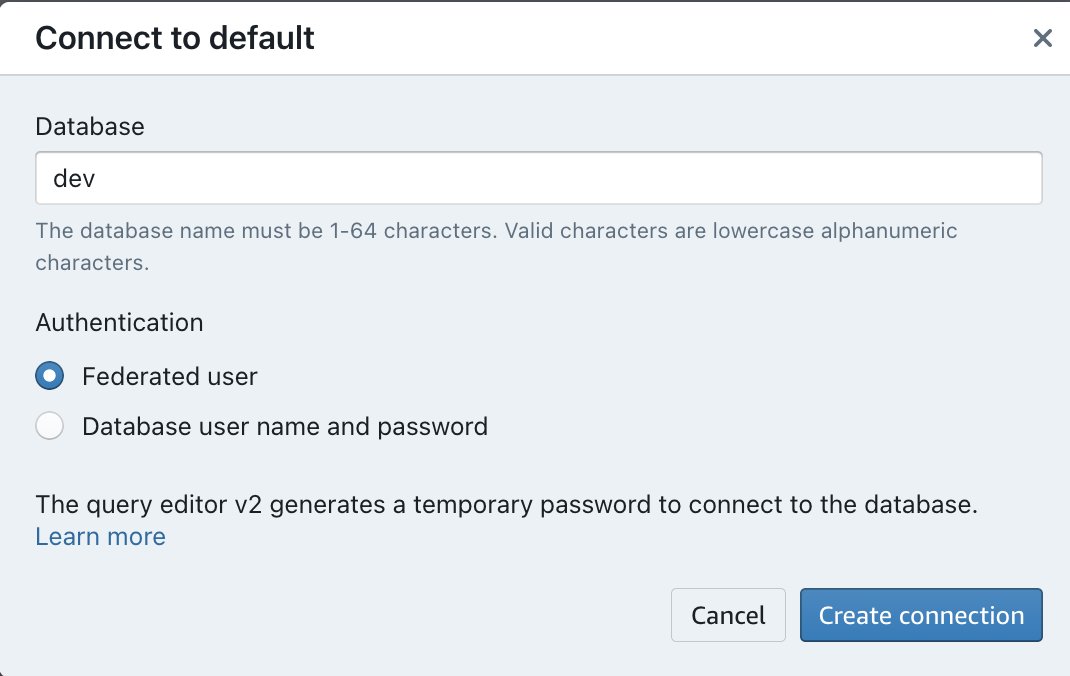

- Navigate to the Amazon Redshift Query Editor v2.

- Double-click on the Amazon Redshift Serverless workgroup name that you created.

- Choose the Federated User option under Authentication.

- Choose Create Connection.

- Create a new editor by choosing the + icon.

- In the editor, write a query that selects from the schema and the table or view that you want to verify. You can also explore the data, run ad hoc queries, and create charts and visualizations.
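A quick way to validate the migration is to compare row counts for each table on both sides. The following sketch builds matching COUNT(*) queries for BigQuery (to run in the BigQuery console) and Amazon Redshift (to run in Query Editor v2), and compares the counts you collect. The project, dataset, schema, and function names are hypothetical. Row counts don’t catch value-level differences, so spot-check some rows as well.

```python
def count_queries(bq_project: str, bq_dataset: str, rs_schema: str,
                  table: str) -> tuple:
    """Return (BigQuery SQL, Amazon Redshift SQL) that count rows in table."""
    bq_sql = f"SELECT COUNT(*) FROM `{bq_project}.{bq_dataset}.{table}`"
    rs_sql = f"SELECT COUNT(*) FROM {rs_schema}.{table};"
    return bq_sql, rs_sql


def compare_counts(source: dict, target: dict) -> dict:
    """Return {table: (source_count, target_count)} for every mismatch.

    A table missing on one side shows up with None for that side.
    """
    mismatches = {}
    for table in sorted(source.keys() | target.keys()):
        src, tgt = source.get(table), target.get(table)
        if src != tgt:
            mismatches[table] = (src, tgt)
    return mismatches
```

An empty result from compare_counts means every table has the same number of rows in both warehouses.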

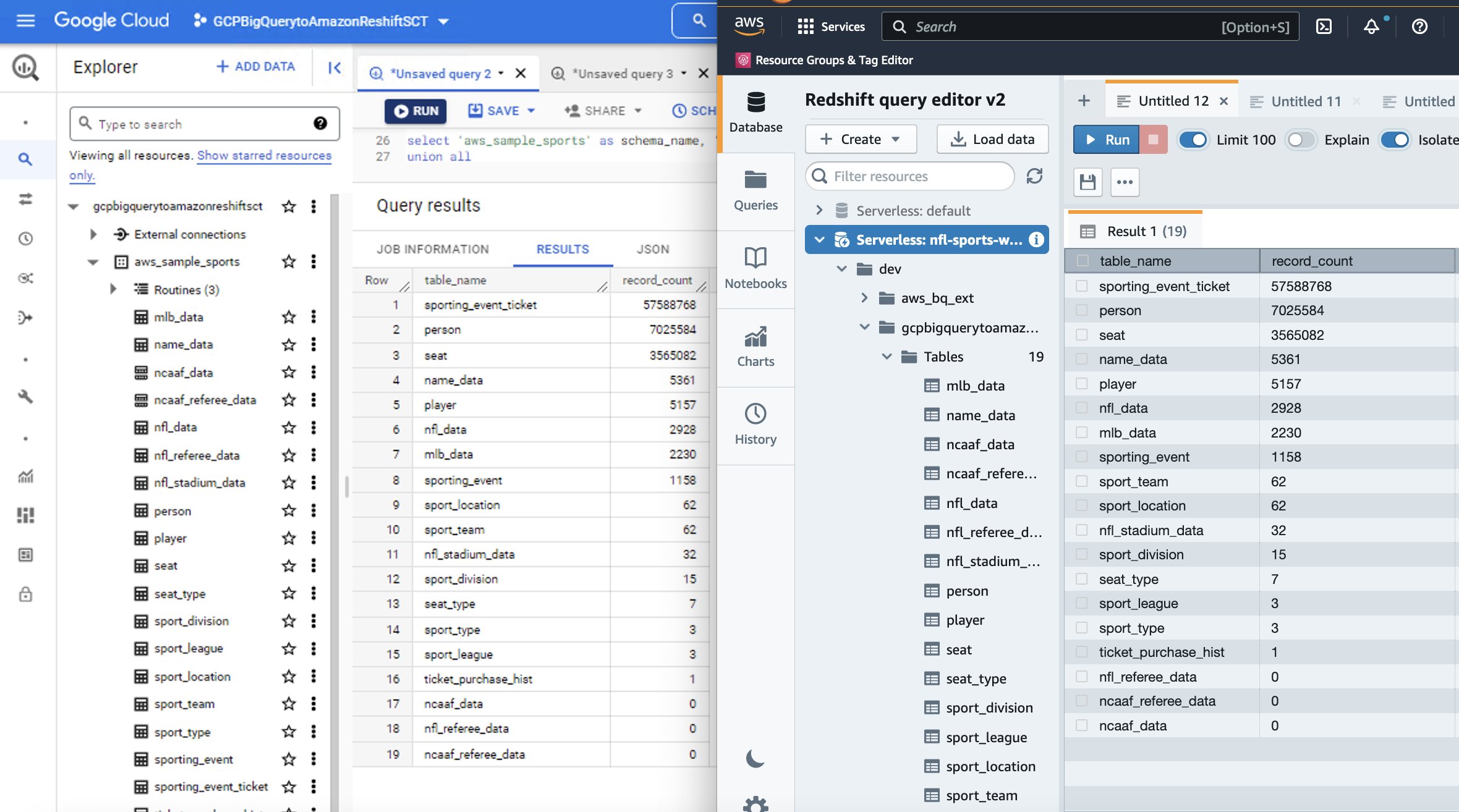

The following is a side-by-side comparison between source BigQuery and target Amazon Redshift for the sports data-set that we used in this walkthrough.

Clean up the AWS resources that you created for this exercise

Follow these steps to terminate the EC2 instance:

- Navigate to the Amazon EC2 console.

- In the navigation pane, choose Instances.

- Select the checkbox for the EC2 instance that you created.

- Choose Instance state, and then Terminate instance.

- Choose Terminate when prompted for confirmation.

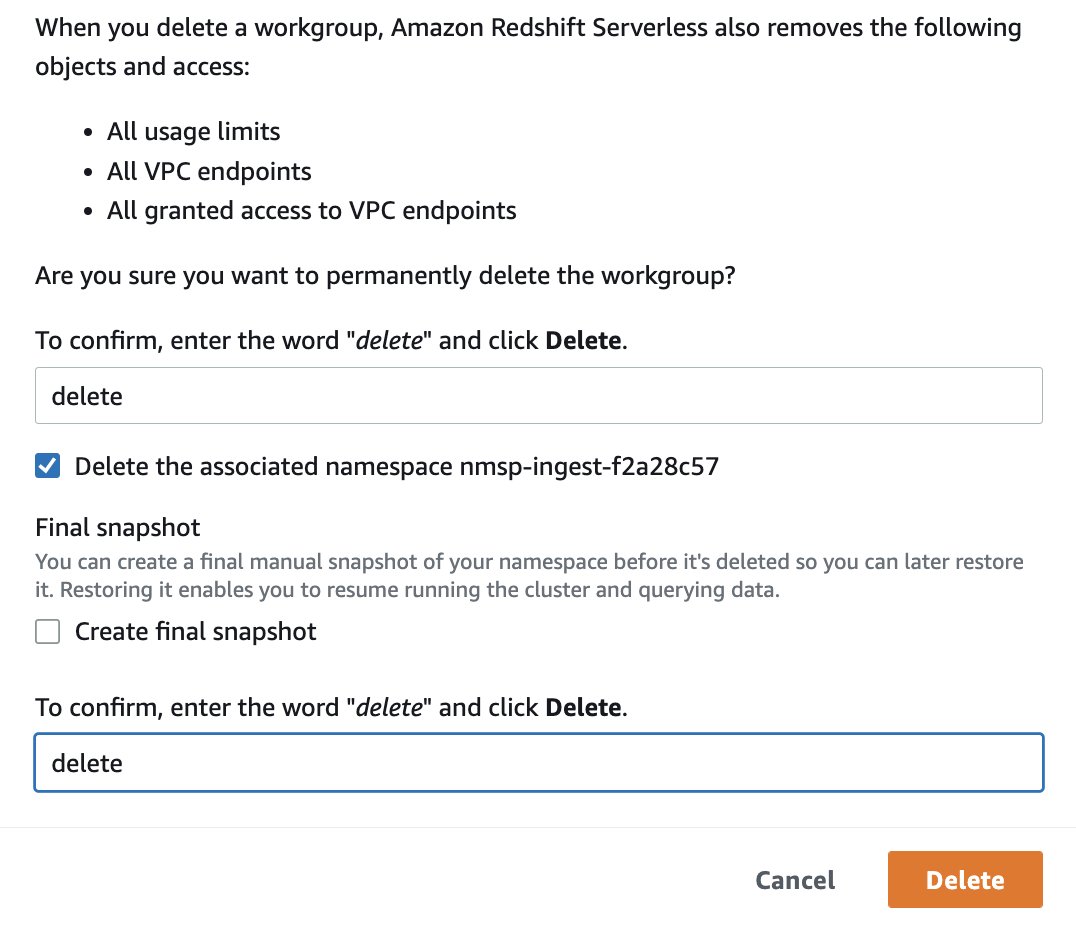

Follow these steps to delete the Amazon Redshift Serverless workgroup and namespace:

- Navigate to Amazon Redshift Serverless Dashboard.

- Under Namespaces / Workgroups, choose the workgroup that you created.

- Under Actions, choose Delete workgroup.

- Select the checkbox Delete the associated namespace.

- Uncheck Create final snapshot.

- Enter delete in the delete confirmation text box and choose Delete.

Follow these steps to delete the S3 bucket:

- Navigate to Amazon S3 console.

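- Choose the bucket that you created.

- If the bucket contains objects, choose Empty and follow the prompts to delete them; a bucket must be empty before it can be deleted.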

- Choose Delete.

- To confirm deletion, enter the name of the bucket in the text input field.

- Choose Delete bucket.
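If you’d rather script the bucket cleanup, the following sketch empties and then deletes the bucket using the boto3 S3 client methods list_objects_v2, delete_objects, and delete_bucket. It assumes versioning is not enabled on the bucket (a versioned bucket also needs its object versions removed), and the function name is hypothetical. The client is passed in rather than created inside the function, which keeps the sketch easy to test with a stub.

```python
def empty_and_delete_bucket(s3, bucket: str) -> int:
    """Delete every object in an unversioned bucket, then the bucket.

    `s3` is a boto3 S3 client (or any object with the same methods).
    Returns the number of objects deleted.
    """
    deleted = 0
    kwargs = {"Bucket": bucket}
    while True:
        page = s3.list_objects_v2(**kwargs)
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            # list_objects_v2 returns at most 1,000 keys per call, which is
            # also the delete_objects limit, so one batch per page works.
            s3.delete_objects(Bucket=bucket, Delete={"Objects": keys, "Quiet": True})
            deleted += len(keys)
        if not page.get("IsTruncated"):
            break
        kwargs["ContinuationToken"] = page["NextContinuationToken"]
    s3.delete_bucket(Bucket=bucket)
    return deleted
```

For example, empty_and_delete_bucket(boto3.client("s3"), "uniquename-bq-rs") removes the incoming folder and its contents before deleting the bucket.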

Conclusion

Migrating a data warehouse can be a challenging and complex, yet rewarding, project. AWS SCT reduces the complexity of data warehouse migrations. In this walkthrough, you saw how a data migration task extracts data from BigQuery, uploads it to Amazon S3, and then copies it into Amazon Redshift. The solution that we presented in this post performs a one-time migration of database objects and data. Data changes made in BigQuery while the migration is in progress aren’t reflected in Amazon Redshift. While the data migration is in progress, put your ETL jobs that load BigQuery on hold, or replay those ETL jobs against Amazon Redshift after the migration. Consider using the best practices for AWS SCT.

AWS SCT has some limitations when using BigQuery as a source. For example, AWS SCT can’t convert subqueries in analytic functions, geography functions, statistical aggregate functions, and so on. Find the full list of limitations in the AWS SCT user guide. We plan to address these limitations in future releases. Despite these limitations, you can use AWS SCT to automatically convert most of your BigQuery code and storage objects.

Download and install AWS SCT, sign in to the AWS Management Console, check out Amazon Redshift Serverless, and start migrating!

About the authors

Cedrick Hoodye is a Solutions Architect at AWS with a focus on database migrations using the AWS Database Migration Service (AWS DMS) and the AWS Schema Conversion Tool (AWS SCT). He works on challenges related to database migrations and works closely with EdTech, Energy, and ISV customers to help them realize the full potential of AWS DMS. He has helped migrate hundreds of databases into the AWS Cloud using AWS DMS and AWS SCT.

Amit Arora is a Solutions Architect with a focus on Database and Analytics at AWS. He works with our Financial Technology and Global Energy customers and AWS certified partners to provide technical assistance and design customer solutions on cloud migration projects, helping customers migrate and modernize their existing databases to the AWS Cloud.

Jagadish Kumar is an Analytics Specialist Solution Architect at AWS focused on Amazon Redshift. He is deeply passionate about Data Architecture and helps customers build analytics solutions at scale on AWS.

Anusha Challa is a Senior Analytics Specialist Solution Architect at AWS focused on Amazon Redshift. She has helped many customers build large-scale data warehouse solutions in the cloud and on premises. Anusha is passionate about data analytics and data science and about enabling customers to achieve success with their large-scale data projects.
