Generative artificial intelligence (AI) is one of the most active areas of machine learning. Unlike traditional AI models, which focus on interpretation and analysis, generative AI is designed to create new content: images, text, sound, and other digital media that often mimic human creativity. By leveraging neural network architectures such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), generative AI can produce original, realistic content that is sometimes hard to distinguish from human-generated work.

In the era of digital transformation, data privacy has emerged as a pivotal concern. As AI technologies, especially generative AI, heavily rely on vast datasets for training and functioning, the safeguarding of personal and sensitive information is paramount. The intersection of generative AI and data privacy raises significant questions: How is data being used? Can individuals’ privacy be compromised? What measures are in place to prevent misuse? The importance of addressing these questions lies not only in ethical compliance but also in maintaining public trust in AI technologies.

This article examines the relationship between generative AI and data privacy. It explores how generative AI affects data privacy and how privacy requirements, in turn, shape generative AI, covering the technological challenges, ethical considerations, and regulatory frameworks that define the current landscape. It also highlights potential solutions and future directions for researchers, practitioners, and policymakers. The discussion ranges from the technical aspects of AI models to their broader societal and legal implications.

The Intersection of Generative AI and Data Privacy

Generative AI learns from large datasets in order to create new, original content. This process involves training models such as GANs or VAEs on extensive datasets. A GAN consists of two parts: the generator, which creates content, and the discriminator, which evaluates it. Through iterative training, the generator learns to produce increasingly realistic outputs that can fool the discriminator. A VAE instead pairs an encoder, which compresses data into a compact latent representation, with a decoder, which generates new samples from that representation. This ability to generate new data points from existing datasets is what sets generative AI apart from other AI technologies.

Data is the cornerstone of any generative AI system. The quality and quantity of the data used in training directly influence the model’s performance and the authenticity of its outputs. These models require diverse and comprehensive datasets to learn and mimic patterns accurately. The data can range from text and images to more complex data types like biometric information, depending on the application.

The data privacy concerns in AI include:

- Data Collection and Usage: The collection of large datasets for training generative AI raises concerns about how data is sourced and used. Issues such as informed consent, data ownership, and the ethical use of personal information are central to this discussion.

- Potential for Data Breaches: With large repositories of sensitive information, generative AI systems can become targets for cyberattacks, leading to potential data breaches. Such breaches could result in the unauthorized use of personal data and significant privacy violations.

- Privacy of Individuals in Training Datasets: Ensuring the anonymity of individuals whose data is used in training sets is a major concern. There is a risk that generative AI could inadvertently reveal personal information or be used to recreate identifiable data, posing a threat to individual privacy.

Understanding these aspects is crucial for addressing the privacy challenges associated with generative AI. The balance between leveraging data for technological advancement and protecting individual privacy rights remains a key issue in this field. As generative AI continues to evolve, the strategies for managing data privacy must also adapt, ensuring that technological progress does not come at the expense of personal privacy.

Challenges in Data Privacy with Generative AI

Anonymity and Reidentification Risks

One of the primary challenges in the realm of generative AI is maintaining the anonymity of individuals whose data is used in training models. Despite efforts to anonymize data, there is an inherent risk of reidentification. Advanced AI models can unintentionally learn and replicate unique, identifiable patterns present in the training data. This situation poses a significant threat, as it can expose personal information, undermining efforts to protect individual identities.
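One common way to reason about reidentification risk is k-anonymity: every combination of quasi-identifiers (attributes such as ZIP code or birth year that are not names but can be linked to a person) should be shared by at least k records. The sketch below is only an illustration of that check, not a complete anonymization pipeline. The field names and sample records are invented for the example.

```python
from collections import Counter


def group_sizes(records, quasi_identifiers):
    """Count how many records share each quasi-identifier combination."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)


def smallest_group_size(records, quasi_identifiers):
    """Return k: the size of the rarest quasi-identifier combination (0 if empty)."""
    return min(group_sizes(records, quasi_identifiers).values(), default=0)


def risky_records(records, quasi_identifiers, k):
    """Return records whose quasi-identifier combination occurs fewer than k times."""
    sizes = group_sizes(records, quasi_identifiers)
    return [
        r for r in records
        if sizes[tuple(r[q] for q in quasi_identifiers)] < k
    ]


# Hypothetical, made-up records with the names already removed.
records = [
    {"zip": "90210", "birth_year": 1985, "sex": "F", "diagnosis": "asthma"},
    {"zip": "90210", "birth_year": 1985, "sex": "F", "diagnosis": "diabetes"},
    {"zip": "90210", "birth_year": 1985, "sex": "M", "diagnosis": "flu"},
    {"zip": "10001", "birth_year": 1972, "sex": "M", "diagnosis": "asthma"},
    {"zip": "10001", "birth_year": 1972, "sex": "M", "diagnosis": "flu"},
]
quasi = ["zip", "birth_year", "sex"]

print("k =", smallest_group_size(records, quasi))
print("records unique enough to be at risk:", risky_records(records, quasi, k=2))
```

Even with names removed, the third record here is the only one with its ZIP code, birth year, and sex, so anyone who knows those three facts about a person could link the diagnosis back to them. A dataset like this would need generalization (for example, coarser ZIP codes) or suppression of the rare record before training.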

Unintended Data Leakage in AI Models

Data leakage refers to the unintentional exposure of sensitive information through AI models. Generative AI, due to its ability to synthesize realistic data based on its training, can inadvertently reveal confidential information. For example, a model trained on medical records might generate outputs that closely resemble real patient data, thus breaching confidentiality. This leakage is not always a result of direct data exposure but can occur through the replication of detailed patterns or information inherent in the training data.
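A simple, admittedly crude way to screen for this kind of leakage in text models is to measure how much of a generated output reproduces training text word for word. The sketch below assumes whitespace tokenization and a 5-word window, both arbitrary choices for illustration. The function names and sample strings are hypothetical, and a low score does not prove that a model has not memorized its data.

```python
def ngrams(tokens, n):
    """Return the set of all n-token windows in a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def verbatim_overlap(generated, training_texts, n=5):
    """Fraction of the generated text's n-grams that appear verbatim in the training texts."""
    generated_ngrams = ngrams(generated.lower().split(), n)
    if not generated_ngrams:
        return 0.0  # text shorter than n tokens: nothing to compare
    training_ngrams = set()
    for text in training_texts:
        training_ngrams |= ngrams(text.lower().split(), n)
    return len(generated_ngrams & training_ngrams) / len(generated_ngrams)


# Hypothetical training record and model outputs.
training = ["patient jane doe was admitted on march 3 with acute asthma"]
outputs = [
    "Patient Jane Doe was admitted on March 3 with acute asthma",
    "the weather is nice and the sky is clear today",
]
for text in outputs:
    print(round(verbatim_overlap(text, training), 2), text)
```

Outputs with a high overlap score can be blocked or sent for review before release. This check catches only exact copies, so paraphrased or lightly altered records would still need other safeguards.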

Ethical Dilemmas in Data Usage

The use of generative AI introduces complex ethical dilemmas, particularly regarding the consent and awareness of individuals whose data is used. Questions arise about the ownership of data and the ethical implications of using personal information to train AI models without explicit consent. These dilemmas are compounded when considering data sourced from publicly available datasets or social media, where the original context and consent for data use might be unclear.

Compliance with Global Data Privacy Laws

Navigating the varying data privacy laws across jurisdictions presents another challenge for generative AI. Laws such as the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA), a state law in the United States, set stringent requirements for data handling and user consent. Ensuring compliance with these laws, especially for AI models used across multiple regions, requires careful consideration and adaptation of data practices.

Each of these challenges underscores the complexity of managing data privacy in the context of generative AI. Addressing these issues necessitates a multifaceted approach, involving technological solutions, ethical considerations, and regulatory compliance. As generative AI continues to advance, it is imperative that these privacy challenges are met with robust and evolving strategies to safeguard individual privacy and maintain trust in AI technologies.

Technological and Regulatory Solutions

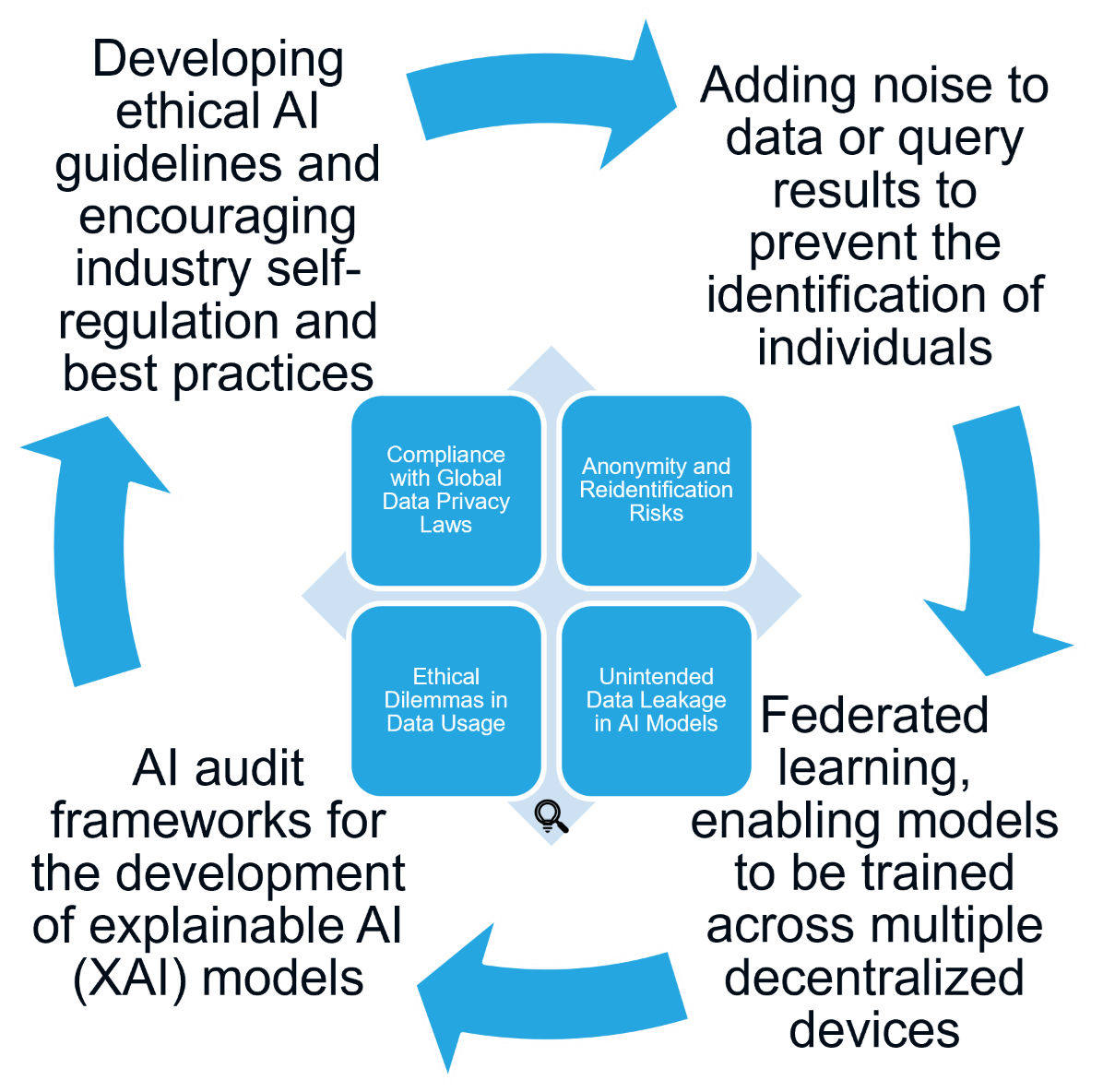

In the domain of generative AI, a range of technological solutions is being explored to address data privacy challenges. Among these, differential privacy stands out as a key technique, as illustrated in Figure 1. It adds calibrated noise to data, query results, or training updates so that the output reveals little about whether any single individual’s record was included, allowing data to be used in AI applications while limiting privacy risk. Another approach is federated learning, which trains a model across multiple decentralized devices or servers that each hold local data samples. Only model updates are shared, so the raw data stays on the user’s device. Additionally, homomorphic encryption is gaining attention because it allows computations to be performed on encrypted data. This means AI models can learn from data without accessing it in its raw form, offering an additional layer of security.
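To make the idea of adding noise concrete, here is a minimal sketch of the Laplace mechanism, a standard differential privacy technique, applied to a counting query. The function names, the sample ages, and the choice of epsilon are assumptions made for illustration. Production systems should rely on a vetted differential privacy library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the values that satisfy predicate.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1 / epsilon gives epsilon-differential privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: how many people in the dataset are 65 or older?
ages = [25, 40, 67, 71, 80]
noisy = private_count(ages, lambda age: age >= 65, epsilon=0.5)
print("noisy count:", round(noisy, 2))
```

A smaller epsilon means more noise and stronger privacy, while a larger epsilon gives more accurate answers with weaker guarantees. Each released answer also consumes part of an overall privacy budget, which is why repeated queries against the same data need to be tracked.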

Figure 1. Data Privacy Solutioning in Generative AI
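Federated learning, mentioned above, can be sketched in a few lines. The example below is a toy version of federated averaging under simplifying assumptions: a one-parameter model y = w * x, two simulated clients, and made-up data. All function names are hypothetical. Each client trains on its own data, and the server only ever sees the resulting weights.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """Run a few gradient-descent steps on one client's private (x, y) pairs."""
    for _ in range(epochs):
        # Gradient of the mean squared error for the model y = w * x.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(client_weights, client_sizes):
    """Combine client weights, weighting each by its number of samples."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


def train(clients, rounds=50, w=0.0):
    """Alternate local training and server-side averaging for several rounds."""
    for _ in range(rounds):
        updates = [local_update(w, data) for data in clients]
        w = federated_average(updates, [len(data) for data in clients])
    return w


# Hypothetical clients whose local data follows y = 3 * x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0), (4.0, 12.0), (5.0, 15.0)],
]
print("learned weight:", round(train(clients), 4))
```

Keeping raw data on the device reduces exposure, but shared model updates can still leak information about the underlying data. For that reason, federated learning is often combined with techniques such as differential privacy or secure aggregation.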

The regulatory landscape is also evolving to keep pace with these technological advancements. AI auditing and transparency tools are becoming increasingly important. AI audit frameworks help in assessing and documenting data usage, model decisions, and potential biases in AI systems, ensuring accountability and transparency. Furthermore, the development of explainable AI (XAI) models is crucial for building trust in AI systems. These models provide insights into how and why decisions are made, especially in sensitive applications.

Legislation and policy play a critical role in safeguarding data privacy in the context of generative AI. Updating and adapting existing privacy laws, like the GDPR and CCPA, to address the unique challenges posed by generative AI is essential. This involves clarifying rules around AI data usage, consent, and data subject rights. Moreover, there is a growing need for AI-specific regulations that address the nuances of AI technology, including data handling, bias mitigation, and transparency requirements. The global nature of AI also makes international collaboration and standards critical: a common framework for data privacy in AI would facilitate cross-border cooperation and compliance.

Lastly, developing ethical AI guidelines and encouraging industry self-regulation and best practices are pivotal. Institutions and organizations can develop ethical guidelines for AI development and usage, focusing on privacy, fairness, and accountability. Such self-regulation within the AI industry, along with the adoption of best practices for data privacy, can significantly contribute to the responsible development of AI technologies.

Future Directions and Opportunities

Privacy-preserving AI technologies offer considerable room for innovation. One key area of focus is the development of more sophisticated data anonymization methods. These methods aim to protect the privacy of individuals while maintaining the utility of data for AI training, a balance that is crucial for ethical AI development. Alongside this, the exploration of advanced encryption techniques, including emerging approaches like quantum encryption, is gaining momentum. These methods promise more robust safeguards for data used in AI systems, enhancing security against potential breaches.

Another promising avenue is the exploration of decentralized data architectures. Technologies like blockchain offer new ways to manage and secure data in AI applications. They bring the benefits of increased transparency and traceability, which are vital in building trust and accountability in AI systems.

As AI technology progresses, it will inevitably interact with new and more complex types of data, such as biometric and behavioral information. This progression calls for a proactive approach in anticipating and preparing for the privacy implications of these evolving data types. The development of global data privacy standards becomes essential in this context. Such standards need to address the unique challenges posed by AI and the international nature of data and technology, ensuring a harmonized approach to data privacy across borders.

AI applications in sensitive domains like healthcare and finance warrant special attention. In these areas, privacy concerns are especially pronounced due to the highly personal nature of the data involved. Ensuring the ethical use of AI in these domains is not just a technological challenge but a societal imperative.

Collaboration among the technology, legal, and policy sectors is crucial in navigating these challenges. Encouraging interdisciplinary research that brings together experts from various fields is key to developing comprehensive and effective solutions. Public-private partnerships are also vital, promoting the sharing of best practices, resources, and knowledge in the AI and privacy field. Furthermore, educational and awareness campaigns are important for informing the public and policymakers about the benefits and risks of AI. Such campaigns can emphasize the importance of data privacy, helping to foster a well-informed dialogue about the future of AI and its role in society.

Conclusion

The integration of generative AI with robust data privacy measures presents a dynamic and evolving challenge. The future landscape will be shaped by technological advancements, regulatory changes, and the continuous need to balance innovation with ethical considerations. The field can navigate these challenges by fostering collaboration, adapting to emerging risks, and prioritizing privacy and transparency. As AI continues to permeate various aspects of life, ensuring its responsible and privacy-conscious development is imperative for its sustainable and beneficial integration into society.

The post The Ethical Algorithm: Balancing AI Innovation with Data Privacy appeared first on Datafloq.